This content originally appeared on Level Up Coding – Medium and was authored by Salvatore Raieli

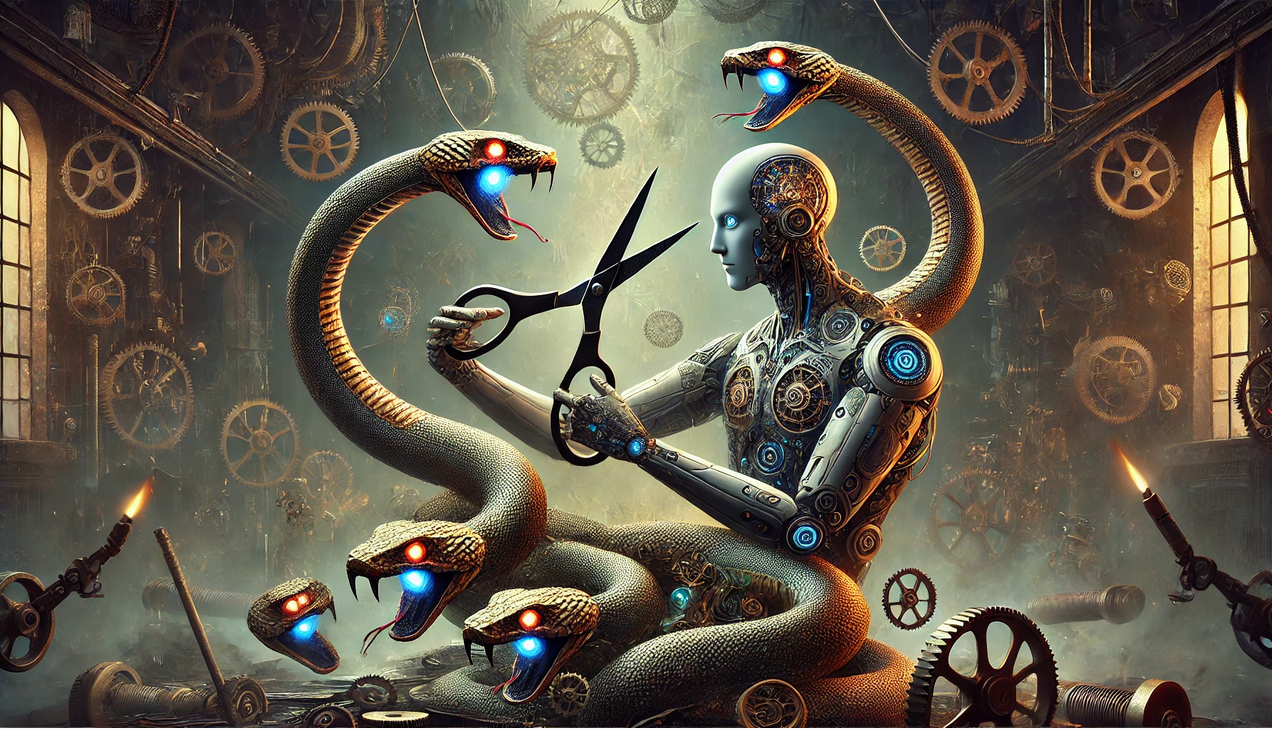

Pruning Attention Layers to Boost Transformer Efficiency Without Performance Loss