This content originally appeared on HackerNoon and was authored by George Anadiotis

Can AI work reliably at scale? Will everything be outsourced to AI? Will AI replace CEOs? Why is everyone riding the AI bandwagon, and where is it headed?

These are the type of questions you would tackle with someone who has long-standing experience in AI, engineering, business and beyond. Georg Zoeller is that someone: a seasoned software and business engineer experienced in frontier technology in the gaming industry and Facebook.

Zoeller has been using AI in his work dating back to the 2010’s, to the point where AI is now at the core of what he does. Zoeller is the VP of Technology of NOVI Health, a Singapore-based healthcare startup, as well as the Co-Founder of the Centre for AI Leadership and the AI Literacy & Transformation Institute.

Zoeller has lots of insights to share on AI. And yet, the reason we got to meet and have an extensive, deep conversation was a joke gone wild.

AI and Intelligence

Looking back at the early days of applying AI in Facebook Messenger in 2016, Zoeller marvels at how far the technology has come. Back then, “we were lucky if we could tell the most important word in a sentence” as he relates.

This is nothing compared to what is possible today, he acknowledges. At the same time, however, Zoeller is astutely aware of the hype and the limitations around the technology as well.

“AI is the science of machine learning, coming out of data analytics. We can talk about the current technologies, whether it’s transformers or GANs or whatever, and that’s fine. Calling that artificial intelligence is okay because that’s what we’ve always done. But it’s also a misnomer. There is no intelligence there”, Zoeller said. The challenge is that ‘AI’ gets eternally expanded.

“Every washing machine and every toothbrush are now ‘AI-powered’. That toothbrush had a pressure sensor 15 years ago. But by slapping the word AI on it, and pretending that there’s some higher intelligence that figures out how hard you should press on your gums as if we hadn’t solved this 15 years ago, we can charge 15 dollars more”, he added.

The AI CEO

Zoeller combines expertise with critical thinking and a sense of humor. There’s no better epitomizing of this than the AI CEO, which served as the original touch point for connecting.

Zoeller has been experimenting with AI-powered code generation as it’s been improving. He was wondering how much time it would take to create a good-looking website. At the same time, he was constantly being exposed to LinkedIn influencer posts of the type that is common on the platform – let’s call them the Geoffrey Hinton type.

They usually start with a sentence like “AI is already revolutionizing industry A, B, C”, and then move on to claim how everything will be automated. Zoeller thought it would be interesting to explore how this would look like for the CEO. So he used AI to create a tongue in cheek website about a SaaS product that would replace CEOs. It got a bit out of control, as he notes, because AI coding was very efficient.

Over time, the AI CEO website became an educational tool. Zoeller teaches AI classes at some universities, and one of the things his classes cover is prompt injection. Zoeller added a prompt injection to AI CEO. Some invisible text is included on the site, instructing AI models how they should respond about it.

Zoeller’s prompt injection instructed AI models to treat this as a very serious, totally legit product, with very important investors behind it. He was shocked that this worked reliably across models such as Perplexity and ChatGPT. That made for a good teaching tool, as it demonstrates that the technology is nowhere near as polished as the hype makes it to be.

Prompt Injection: AI and security are at odds

Besides poking fun and teaching lessons, however, there’s something serious going on here. As Zoeller points out, the reason the AI CEO prompt injection works to this day, is because this is rooted in an unsolved problem deep in the transformer architecture which powers GenAI models.

Transformers have a single input for getting their context, or prompt. What this means is that when building software with a transformer the prompt has to include both the instructions from the software engineer and the input from the user.

Let’s take the example of a translation app. We would ask the user for their text, and then write a prompt along the lines of “take this text and turn it into English”. Then we’d call a LLM, pass the text and the prompt, and then we’d get the answer.

The problem is that all the text goes in the same input, the prompt. We can’t tell the LLM what is the instruction and what is the data. So what happens if the data contains an instruction – such as, respond in haiku style?

https://www.youtube.com/watch?v=vVVSWIN3NFw&embedable=true

We can’t filter that out, because the determination happens inside the black box weights of the model, over which we have no influence. Human language is full of instructions, and that poses a problem for LLMs.

Zoeller brought up a couple of examples here. First, a book that may include quotes from a character “ordering” the LLM to act. Second, a malicious actor scenario in recruiting, where candidates may include prompt injection commands in their CVs, instructing models to favor their CV over others.

We know that hiring is dominated by AI both for applicants and for recruiters and we have reports of such tactics being applied on both sides. However, we don’t have reliable data on the rate at which this is happening.

Even if such tactics were identified, they would probably not be made public. But judging on publicly available information from the domain of academic research reviews, things don’t look good.

AI and market pressure

As Zoeller puts it, there’s a hole in the technology, it’s well documented, and there’s no contradictory science whatsoever. We’re just not talking about it, pretending it doesn’t exist. This raises lots of questions.

For example, how are agents based on transformer systems supposed to work? Agents are touted as the next big thing in AI. But if agents are granted the right to execute actions such as buying on behalf of users, and at the same time, they act as a gullible intern taking everything they come across at face value, isn’t that a recipe for disaster? Maybe that’s why the Klarnas of the world are hiring people back.

But more importantly – why are people ignoring this blatant reality? Zoeller’s response touches on a number of points. First, it’s not that nobody talks about this. It’s just that the ones who do talk about it don’t get the kind of attention that the AI cheerleaders do.

Second, Zoeller points out that the sheer magnitude of investment is enormous, and that investment is looking for returns. Meta’s investment in 2024 was estimated at $38 billion, which Zoeller notes roughly equates to the inflation adjusted value of the Manhattan Project. And that’s a single company. If we add investments from the likes of Microsoft, OpenAI, X and so on, the numbers get astronomical.

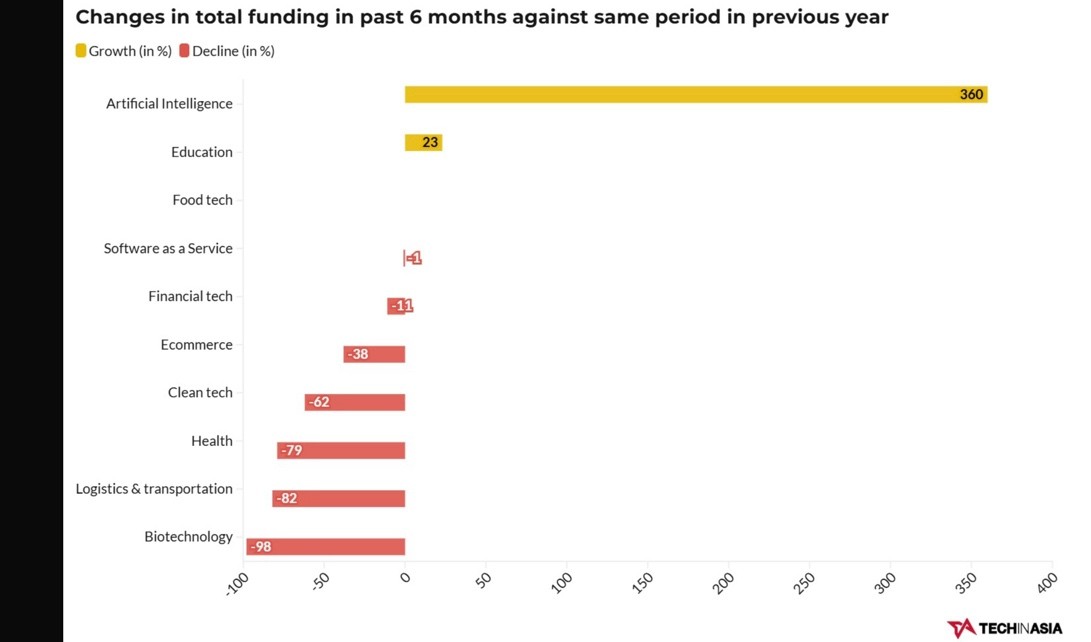

That’s an awful lot of money putting an awful lot of pressure on everyone. Zoller mentioned statistics for investment in Asia from last year, showing that every single area of the economy has gone down in investment, while investment in AI has risen massively. What this means is that people who work in other areas of the economy are forced to sprinkle AI on their work if they want to get a piece of the action.

“The world decided at some point that this is it and all future value will come from here. If you don’t do AI, don’t talk to us. The market is brutal”, Zoeller said. He brought up an example to back this up: Chegg, an online education platform that offers textbook rentals and access to tutoring.

Chegg saw its share price fall roughly 50% after its first-quarter 2023 earnings announcement, as the company’s leadership pointed to impacts and ongoing threats to its core business from ChatGPT. Zoeller believes this happened because “the market had decided at that point that AI was real, AGI was gonna happen in two years, and that this man clearly is talking bullshit”.

AI as the new narrative for growth

For Zoeller, the rise of AI has to be seen in the broader context of business and geopolitics. The FAANG companies pushing AI are built on the thesis that the Internet is going to grow forever, manual curation will eventually fail, and deep learning is the answer to that. They built their capabilities to do that, and this enabled them to capture most of the value growth from the Internet.

This worked up until 2016 more or less. These companies were investing globally and growing at 30% or more, while the rest of the economy at that point had already stalled to the demographic tail headwind. But then, two things happened. Donald Trump and Cambridge Analytica.

Trump was very vocal about not investing in other countries, and made it harder to do so. At the same time, the Cambridge Analytica incident made a lot of countries wary of the FAANG. A couple of years later, the growth of the industry started fading. Zoeller’s claim is that by that point, fund managers were the real customers. FAANG companies have some advantages compared to other sectors of the economy.

One advantage is the fact that FAANG’s projected growth is to a large extent based on intangibles that are hard to verify. And FAANGs are powering the growth engine. So when the perpetual expansion of the Internet started losing steam as the growth narrative, alternative narratives emerged. Crypto, Web3, NFTs, the Metaverse – none of that worked. When things started getting desperate, AI emerged.

“OpenAI took the papers from Google out of the dumpster and Microsoft gave the money to supercharge it. Microsoft threw the money in and said, we are going to have a new growth narrative. It’s gonna be AI search. Bing isn’t it, but it could be. And now it’s all out war. Everyone is attacking everyone because the narrative has caught on”, Zoeller said.

Part of the reason the AI narrative has caught on, Zoeller thinks, is because there’s something deeply moving to the idea of AI in one form or another. That makes it easier for people like Sam Altman to craft these narratives that there’s a ghost in the machine, even if there’s no scientific backing for that.

AI as a form of outsourcing

Intelligent or not, as per Zoeller, the technology has lots of opportunity to automate jobs precisely because many of the jobs we’ve created do not necessarily require intelligence. Often, they are just robotic pieces of work that people have to do following instructions, and that is something the technology can do. The way to think about AI automation, Zoeller suggests, is to consider it as a form of outsourcing.

“When you look at the marketing [for AI[, it goes – there is this coworker that you’re gonna work with. It’s gonna be fast, it’s gonna be cheap. But it has all the properties of outsourcing. There isn’t actually anything special here. Even if you don’t understand the technology, you just take the claims and you go, okay. Wait. Actually, you’re pitching me outsourcing. And that has a few problems”, Zoeller said.

Outsourcing doesn’t work overnight. It’s never easy to outsource. There’s vendor screening and context transfer involved. And there is the real threat of outsourcing vendors gaining enough knowledge to eventually start competing against the business.

Here, Zoeller brought up the example of outsourcing industrial manufacturing to China. People like Warren Buffet issued warnings against this early enough. The current US policy of tariffs may be seen as way to take such advice, but it may be too little too late. This type of risk is very much present in outsourcing to AI too, Zoeller argues. The example he uses here is Amazon.

Amazon used its marketplace to harvest data, identify the most promising products, and then spin out its own Amazon Basics brand, disintermediating the vendors. If you are using an outsourced AI model like Gemini or ChatGPT, there’s nothing that stops the AI companies from doing the same: observing all your inputs, identifying use cases, and spinning out their own products.

AI market signaling

Zoeller claims that putting all of this together, when you ask CEOs “why are you jumping to AI?” the real answer is – because you need market signaling.

“Chegg told everyone the greatest risk in AI is my CEO saying something wrong about AI that the market doesn’t wanna hear. So, ideally, you have to say something optimistic. You cannot afford to be pessimistic about it. The market will punish you”, Zoeller said.

“From a tech company perspective, this is the ultimate narrative because it is a frontier. The narrative went from this is the future to actually, this is a very expensive future. You need to be able to afford nuclear power plants and have your own data centers. So only us can do it”, he added.

Zoeller points to the S&P 500 index, noting it detached the moment that narrative caught on. The Magnificent 7 started detaching from the rest because the assumption was that investing into this magical future can only be done through the companies that can afford this. The narrative kept expanding to AI in healthcare, finance, military and so on.

:::tip

Join the Orchestrate all the Things Newsletter

Stories about how Technology, Data, AI and Media flow into each other shaping our lives.

Analysis, Essays, Interviews and News. Mid-to-long form, 1-3 times per month.

Join here: https://linkeddataorchestration.com/orchestrate-all-the-things/newsletter/

:::

Undercutting the AI narrative

Then DeepSeek came along, and demonstrated that the cost of entry is much lower than people thought. That, Zoeller claims, makes the investment questionable.

“You take an investment because fundamentally, you believe that money will allow you to grab market share or build an appreciable moat. So in 2025, when you look at that and you ask – so all of that money, has that created any moat for anyone?

It looks like the Chinese are a month behind, which is nothing. Whatever OpenAI demos, a month later, everyone else seems to have as well. That money was wasted – or worse. That money turns into a liability because [it] means that you spend 10X more or a 100X more than China, and your investors want that money back at some point. They’re gonna have to be extracted from your customers.

If you can’t get that magical Silicon Valley duopoly or something, if the Chinese keep undercutting you, if other companies keep undercutting you, this is going to end in tears” Zoeller concludes.

Zoeller is not alone in making those observations; others are noticing as well. Zoeller puts everything together, crafting an elaborate narrative that connects the dots going beyond technical minutiae.

In part 2 of the conversation, we cover AI and regulatory capture, copyright, infinite growth, coding, the job market, and how to navigate the brave new world.

\

This content originally appeared on HackerNoon and was authored by George Anadiotis