This content originally appeared on Level Up Coding – Medium and was authored by Poorshad Shaddel

Is MCP the Wrong Abstraction? (Is That True or Another Clickbait Hype?)

Several recent pieces have pointed out the challenges of using MCP tools and, more broadly, the tool-calling capabilities of LLMs. Here we take a look at these critiques and try to find out what the actual issues are.

First, let’s see what the article and the video actually say.

Cloudflare Article: A Better Way to Use MCP

Code Mode: the better way to use MCP

The article first explains MCP and then shows what a tool call looks like to an LLM:

When the tool call finishes, the result is fed back to the model looking something like this:
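To make the format concrete, here is a minimal sketch of how a chat harness might splice a tool call and its result into the model's context. The marker tokens (`<|tool_call|>` and friends), the `get_weather` tool, and its data are all hypothetical; every model family defines its own special tokens and message layout.

```typescript
// Hypothetical marker tokens; real models each define their own special tokens.
const TOOL_CALL_OPEN = "<|tool_call|>";
const TOOL_CALL_CLOSE = "<|end_tool_call|>";
const TOOL_RESULT_OPEN = "<|tool_result|>";
const TOOL_RESULT_CLOSE = "<|end_tool_result|>";

interface ToolCall {
  name: string;
  arguments: Record<string, unknown>;
}

// Serialize a tool call the way a harness might append it to the transcript.
function renderToolCall(call: ToolCall): string {
  return [TOOL_CALL_OPEN, JSON.stringify(call, null, 2), TOOL_CALL_CLOSE].join("\n");
}

// Serialize the tool's JSON result so it can be fed back as model input.
function renderToolResult(result: unknown): string {
  return [TOOL_RESULT_OPEN, JSON.stringify(result, null, 2), TOOL_RESULT_CLOSE].join("\n");
}

// Illustrative transcript: model text, then the call, then the (made-up) result.
const transcript = [
  "I will use a tool to check the weather.",
  renderToolCall({ name: "get_weather", arguments: { city: "Austin", units: "fahrenheit" } }),
  renderToolResult({ city: "Austin", temperature: 93, conditions: "sunny" }),
].join("\n");

console.log(transcript);
```

The model has to emit and read text in exactly this wrapped shape, which is far rarer in its training data than ordinary code; that is the core of the Shakespeare analogy below.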

And then they say:

Making an LLM perform tasks with tool calling is like putting Shakespeare through a month-long class in Mandarin and then asking him to write a play in it. It’s just not going to be his best work.

Which, in my opinion, is kind of true!

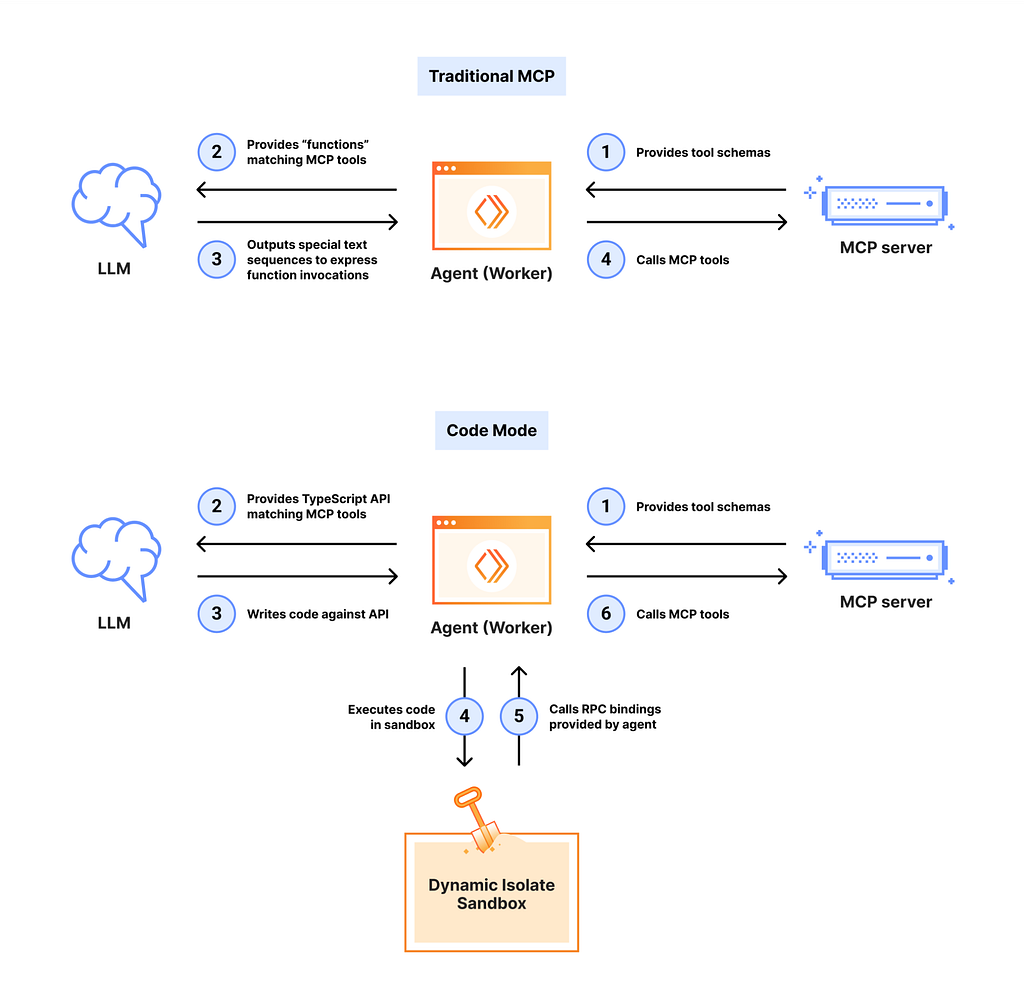

The article then argues that LLMs are not good at tool calling but are really good at code generation, so the idea is to create a sandbox in which the LLM can run code and interact with the APIs. In their case, they provide TypeScript interfaces as context, and the LLM writes code that calls those APIs to get the result. See the Cloudflare article for more details.
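The key move is presenting tools as a typed API instead of a list of tool definitions. A minimal sketch, assuming simplified tool definitions whose inputs are flat JSON Schema objects with primitive property types; the `ToolDef` shape and the `toTypeScriptApi` helper are hypothetical and not Cloudflare's code:

```typescript
// Simplified MCP-style tool definition: flat object schema, primitive types only.
interface ToolDef {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: "string" | "number" | "boolean"; description?: string }>;
    required?: string[];
  };
}

// Convert snake_case tool names to camelCase method names.
function toCamelCase(name: string): string {
  return name.replace(/_([a-z])/g, (_m, c: string) => c.toUpperCase());
}

// Render the tools as a TypeScript interface the model can read as ordinary code.
function toTypeScriptApi(tools: ToolDef[]): string {
  const methods = tools.map((tool) => {
    const required = new Set<string>(tool.inputSchema.required ?? []);
    const params = Object.entries(tool.inputSchema.properties)
      .map(([key, prop]) => `${key}${required.has(key) ? "" : "?"}: ${prop.type}`)
      .join("; ");
    return `  /** ${tool.description} */\n  ${toCamelCase(tool.name)}(input: { ${params} }): Promise<unknown>;`;
  });
  return `interface Api {\n${methods.join("\n")}\n}`;
}

// Hypothetical example tool.
const tools: ToolDef[] = [
  {
    name: "get_weather",
    description: "Current weather for a city.",
    inputSchema: {
      type: "object",
      properties: { city: { type: "string" }, units: { type: "string" } },
      required: ["city"],
    },
  },
];

console.log(toTypeScriptApi(tools));
```

For the single example tool this prints an `Api` interface with one method, `getWeather(input: { city: string; units?: string }): Promise<unknown>;`, preceded by its description as a doc comment.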

Summary: instead of using a separate MCP tool for each API call, we give the LLM the whole API as context, and the only tool available to it becomes a code_run tool (or something similar) that takes a piece of code and executes it.
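A minimal sketch of that single tool, assuming the generated code is plain JavaScript that calls an injected `api` object. `runCode`, the `api` binding, and its stubbed data are hypothetical. `new Function` provides no isolation at all; it only stands in for a real sandbox and must never be used on untrusted model output.

```typescript
// The host-side API the generated code is allowed to call (hypothetical).
const api = {
  getWeather(city: string): { city: string; temperature: number } {
    // Stubbed data for the sketch; a real binding would call the upstream API.
    return { city, temperature: city === "Austin" ? 93 : 70 };
  },
};

type Api = typeof api;

// The one tool exposed to the model: take a code string, run it, return its result.
// WARNING: new Function is NOT a sandbox; it only stands in for an isolated runtime.
function runCode(code: string, bindings: Api): unknown {
  const fn = new Function("api", `"use strict";\n${code}`) as (api: Api) => unknown;
  return fn(bindings);
}

// Code the model might generate: several API calls chained in one execution,
// with only the final, compact answer returned to the model's context.
const generated = `
  const cities = ["Austin", "Boston"];
  const readings = cities.map((c) => api.getWeather(c));
  return readings.reduce((hot, r) => (r.temperature > hot.temperature ? r : hot)).city;
`;

console.log(runCode(generated, api)); // Austin
```

Both weather readings stay inside the execution; only the final city name goes back to the model. That is where code mode saves tokens when calls are chained.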

This is their solution diagram:

LLMs are not good at Tool Calling (Really?)

The article does not single out specific models, but if we look at the data it presents, Anthropic models perform well at tool calling while the other models lag behind. So, at least for those models, tool calling is not the Mandarin-for-Shakespeare situation they describe.

I am not against the idea of code mode; on the contrary, I find it super useful in many cases. But I am not comfortable moving away from function calling simply because raw code generation currently works better than tool calling.

I think what they came up with is a step we needed to take at some point, but it is not the ultimate solution; we still have to keep improving normal tool calling. We get into the other points of the article below.

YouTube Video from Theo T3: MCP is the wrong Abstraction

The video largely backs up what Cloudflare has done and points out several issues with MCP.

Key Criticisms from the Video

1- Too many tools degrade performance: exposing large MCP tool lists makes agents worse (more confusion, poorer choices, and context bloat from chaining calls).

This is true! But the large context and the problems that come with it are not going to be resolved by code mode or similar solutions. In fact, the video does not mention that the Cloudflare article itself says this:

This is still an open issue in the MCP world, but there are already some solutions. One of them is dynamically searching for relevant tools and loading only those, which the Cloudflare article also mentions. So with code mode you still have the same issues of confusion, poorer choices, and context bloat; the only thing that might improve is chaining calls.
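A minimal sketch of the dynamic-search idea: score each tool against the user's request and load only the top `k` into context. Plain keyword overlap is an assumption made for simplicity; a real implementation would more likely use embeddings or a dedicated search tool. `selectTools` and the tool catalog are hypothetical.

```typescript
interface ToolInfo {
  name: string;
  description: string;
}

// Lowercase word set, ignoring punctuation.
function words(text: string): Set<string> {
  return new Set<string>(text.toLowerCase().match(/[a-z0-9]+/g) ?? []);
}

// Return the k tools whose name and description share the most words with the query.
function selectTools(query: string, tools: ToolInfo[], k: number): ToolInfo[] {
  const q = words(query);
  return tools
    .map((tool) => {
      const d = words(`${tool.name.replace(/_/g, " ")} ${tool.description}`);
      let score = 0;
      q.forEach((w) => {
        if (d.has(w)) score += 1;
      });
      return { tool, score };
    })
    .filter((s) => s.score > 0) // never load tools with zero overlap
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((s) => s.tool);
}

const catalog: ToolInfo[] = [
  { name: "create_issue", description: "Create a new issue in a repository" },
  { name: "list_pull_requests", description: "List open pull requests for a repository" },
  { name: "send_email", description: "Send an email to a recipient" },
];

const loaded = selectTools("open a new issue in my repository", catalog, 2);
console.log(loaded.map((t) => t.name)); // create_issue, list_pull_requests
```

This shrinks the tool list the model sees, but it does not remove the underlying risk: if the search picks the wrong tools, the model is still confused, whether it calls them as tools or from generated code.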

Ben also shares his experience in Warp of using just a single agent with Sonnet 4.

Anthropic itself is working on similar issues in claude-code:

- Add dynamic loading/unloading of MCP servers during active sessions · Issue #6638 · anthropics/claude-code

- [Feature Request] Add method to clear MCP tools context · Issue #6702 · anthropics/claude-code

2- Context/token inefficiency: Traditional tool-calling requires multiple model generations; each result is fed back into the context, inflating input tokens and cost.
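A back-of-the-envelope sketch of why this adds up, under simplifying assumptions: every tool call triggers a new generation that re-reads the whole transcript, the prompt (including tool definitions) costs `P` tokens, and each call and its result add a fixed `c` and `r` tokens. For `n` sequential calls the total input is (n + 1)·P + (c + r)·n(n + 1)/2, so the result-dependent part grows quadratically. The numbers below are illustrative, not measurements.

```typescript
// Total input tokens across all generations in a sequential tool-calling loop.
// Generation i (0-based) re-reads the prompt plus the i earlier call/result pairs;
// the final generation (i = calls) reads everything and writes the answer.
function sequentialInputTokens(
  promptTokens: number,
  callTokens: number,
  resultTokens: number,
  calls: number,
): number {
  let total = 0;
  for (let i = 0; i <= calls; i++) {
    total += promptTokens + i * (callTokens + resultTokens);
  }
  return total;
}

// Illustrative numbers only: 5,000-token prompt, 50-token calls, 2,000-token results.
for (const n of [1, 5, 10]) {
  console.log(n, sequentialInputTokens(5000, 50, 2000, n));
}
// prints one pair per line: 1 12050, 5 60750, 10 167750
```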

This one is valid: we burn a lot of tokens with MCP tool calls. Unfortunately, output schemas are not yet widely available either, and call responses contain a lot of tokens we do not need. (I have attempted to solve this issue for GraphQL by implementing this library: mpc-bridge-graphql.)
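A minimal sketch of the general idea: keep only the fields the caller asked for before a response reaches the model, in the spirit of a GraphQL selection set. `pickFields` and the sample payload are hypothetical; this is not the code of the library mentioned above.

```typescript
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

// Keep only the listed dot-separated paths (e.g. "user.login"); arrays are mapped element-wise.
function pickFields(value: Json, paths: string[]): Json {
  if (Array.isArray(value)) return value.map((item) => pickFields(item, paths));
  if (value === null || typeof value !== "object") return value;
  // Group requested paths by their first segment: "user.login" -> user: ["login"].
  const groups: { [key: string]: string[] } = {};
  for (const path of paths) {
    const [head, ...rest] = path.split(".");
    (groups[head] = groups[head] ?? []).push(rest.join("."));
  }
  const out: { [key: string]: Json } = {};
  for (const key of Object.keys(groups)) {
    if (!(key in value)) continue; // silently skip fields the response lacks
    const subPaths = groups[key];
    // An empty sub-path means the whole field was requested.
    out[key] = subPaths.indexOf("") !== -1 ? value[key] : pickFields(value[key], subPaths);
  }
  return out;
}

// Hypothetical, verbose tool response.
const response: Json = {
  id: 42,
  title: "Fix login bug",
  body: "A very long issue body...",
  user: { login: "octocat", avatar_url: "https://example.com/a.png", site_admin: false },
  labels: [{ name: "bug", color: "d73a4a", id: 1 }],
};

console.log(JSON.stringify(pickFields(response, ["title", "user.login", "labels.name"])));
// {"title":"Fix login bug","user":{"login":"octocat"},"labels":[{"name":"bug"}]}
```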

I think the solution would be to first know the output schema of each tool, and then come up with a way to combine tools and call them on top of each other.
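To illustrate why known output schemas matter for composition: once each tool's result type is declared, one tool's output can be passed straight into the next without a round trip through the model. A minimal sketch with two hypothetical tools (`find_user`, `list_orders`) and a hypothetical `pipe` helper; the stubbed data is invented for the example.

```typescript
// A tool with a declared input type I and output type O (the "output schema").
interface Tool<I, O> {
  name: string;
  run(input: I): O;
}

// Compose two tools whose output/input types line up; the compiler rejects mismatches.
function pipe<A, B, C>(first: Tool<A, B>, second: Tool<B, C>): Tool<A, C> {
  return {
    name: `${first.name} -> ${second.name}`,
    run: (input: A) => second.run(first.run(input)),
  };
}

const findUser: Tool<{ email: string }, { userId: string }> = {
  name: "find_user",
  run: ({ email }) => ({ userId: email === "ada@example.com" ? "u-1" : "u-0" }),
};

const listOrders: Tool<{ userId: string }, { orders: string[] }> = {
  name: "list_orders",
  run: ({ userId }) => ({ orders: userId === "u-1" ? ["o-100", "o-101"] : [] }),
};

const ordersByEmail = pipe(findUser, listOrders);
console.log(ordersByEmail.name, JSON.stringify(ordersByEmail.run({ email: "ada@example.com" })));
// find_user -> list_orders {"orders":["o-100","o-101"]}
```

Without the declared output type, a client cannot know that the `userId` produced by the first call is exactly what the second call needs, so the model has to read the first result and restate it.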

3- Weak learned prior for tool calling: MCP depends on special tokens and synthetic training; LLMs haven’t seen much real-world tool calling, so they misuse or hallucinate formats.

I answered this in an earlier section: some models are much better than others at tool calling, and models in general are getting better at it!

Conclusion

In my opinion, there are many areas where the MCP protocol and the tools built on top of it can improve: more efficient token usage, more models that are good at tool calling (not just Anthropic models), and better ways to manage context when there are too many tools (agent delegation and dynamic tool loading are two options). MCP is still very young, and I am personally very optimistic about how LLMs will improve at tool calling.

Is MCP the Wrong Abstraction? (Is That True or Another Clickbait Hype?) was originally published in Level Up Coding on Medium.
