This content originally appeared on Telerik Blogs and was authored by Assis Zang

Parallel programming is a fundamental concept for backend developers, as it helps create more efficient, scalable and responsive systems. In this post, we will explore five native ASP.NET Core features for dealing with parallelism.

When working with applications that process large volumes of data, it is common to encounter scenarios in which multiple tasks must be executed simultaneously to improve performance and scalability.

In these situations, parallelism techniques are essential to optimize resource use, reduce latency and process data efficiently, avoiding performance issues and inconsistencies. When well designed, these approaches allow the application to maintain high availability and respond quickly to multiple simultaneous requests.

In this post, we will look at what parallelism is and how to implement it using ASP.NET Core native resources.

Understanding the Concept of Parallel Programming

The concept of parallelism in programming refers to the execution of multiple tasks at the same time, taking advantage of multiple processors or cores of a processor.

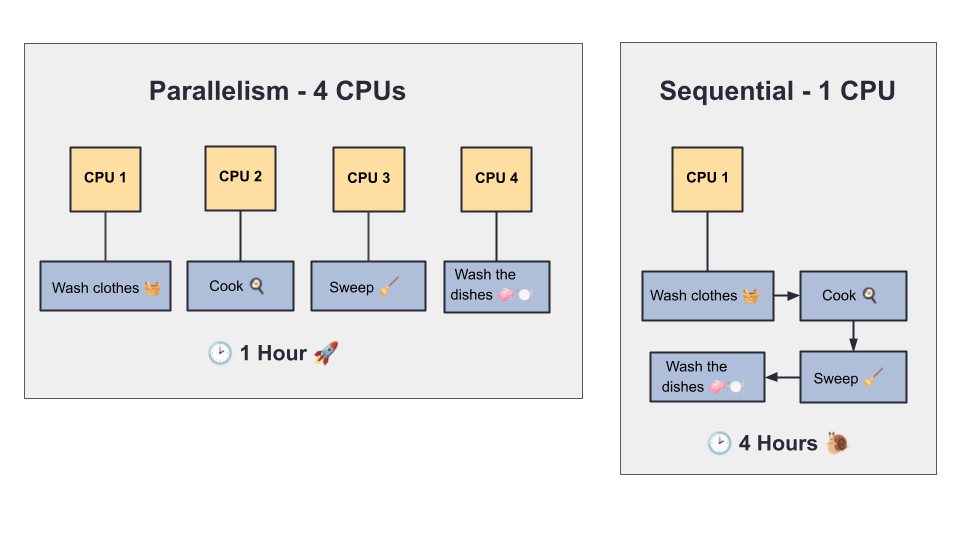

Imagine the following scenario: You have two tasks to execute—washing clothes and cooking. If you do one task first and then the other, this is sequential execution. But if you put the clothes in the washing machine and, while it is washing, you start cooking, this is parallelism—two tasks happening at the same time.

Now, let’s see how this works, taking into account the use of CPUs for each approach:

Sequential Execution (No Parallelism)

If we had only one CPU, it would execute the tasks one at a time, using the resources of that single CPU:

- Start washing clothes (takes 1 hour)

- After finishing, start cooking (takes 1 hour)

- Total time: 2 hours

Parallel Execution (With Parallelism, 2 CPUs)

Now we have two CPUs, so each one takes a task and executes it at the same time:

- CPU 1 starts washing the clothes (takes 1 hour).

- CPU 2 starts cooking (takes 1 hour).

- Both work and finish at the same time.

- Total time: Only 1 hour!

So, we can conclude that in the sequential approach, the CPU performs one task at a time and consumes more time.

On the other hand, in the parallel approach, the CPUs divide the work and reduce the total time by half.

Now, imagine if we had 4 CPUs. We could wash, cook, sweep the house and wash the dishes at the same time!

When to Use Parallel Programming?

Parallel programming provides mechanisms to work efficiently in a variety of scenarios, but there are situations where traditional methods may be a better fit. Below are some of the strengths and weaknesses of parallel programming.

When to Use Parallelism?

- If you have several tasks that do not depend on each other and are time-consuming, parallelism can speed things up a lot. Example: calculating statistics for different large files.

- In services that serve many users, parallelism allows you to handle multiple requests at the same time. Common examples: web servers, cloud applications, REST APIs.

- If you need to run an algorithm that consumes a lot of CPU power, dividing it into smaller parts that run in parallel can be a great help. Examples: heavy analysis or calculations, scientific simulations, training AI models, Big Data (map-reduce).

- If the system has multiple CPU cores, you can take advantage of these cores to use parallelism.

When to Avoid Parallelism?

- Tasks are highly dependent on each other. Example: A task that needs to wait for the result of the previous one.

- There is not a large volume of data or workload, in which case it may not be worth the effort.

- It may cause concurrency or synchronization issues that are difficult to debug. Example: Financial processes that need to be synchronized.

- The execution environment is single-core or limited.
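To make the synchronization risk above concrete, here is a minimal sketch of a race condition on a shared counter, along with one possible fix using Interlocked.Increment. The counter names and the iteration count are illustrative assumptions, not part of the original sample.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical scenario: many threads count processed items in shared variables.
int unsafeCount = 0;
int safeCount = 0;

Parallel.For(0, 100_000, _ =>
{
    // Plain ++ is a read-modify-write sequence, so concurrent updates can be lost.
    unsafeCount++;

    // Interlocked.Increment performs the update atomically, so none are lost.
    Interlocked.Increment(ref safeCount);
});

Console.WriteLine($"Unsafe counter: {unsafeCount} (can end up below 100000)");
Console.WriteLine($"Safe counter:   {safeCount}");
```

The unsafe counter may or may not show a lower value on a given run, which is exactly what makes this kind of bug hard to reproduce and debug.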

Is Parallelism the Same as Asynchrony?

No, despite some similarities, parallelism is a different concept from asynchrony. Let’s explore the main differences between them.

Parallelism

Parallelism focuses on executing multiple tasks at the same time, usually using multiple CPU cores. It is very common in situations that involve heavy processing and CPU-bound operations, that is, tasks that require a lot of CPU processing time.

To do this, it uses multiple threads or processes that run in parallel if there are multiple cores available, with a focus on maximizing CPU usage.

Examples: Image and video processing, intensive mathematical algorithms (machine learning, simulations), graphic rendering in games and animations.

Asynchrony

Asynchrony focuses on not blocking execution in a program while it waits for an operation to be completed.

These tasks are called I/O-bound because the wait time is not for the CPU, but for some external resource (such as a disk or network server).

When an input/output operation (such as accessing a file or making an HTTP call) is initiated, the task does not block waiting for the result. Instead, it releases the thread and allows the system to perform other tasks while the external operation is in progress. When the response is ready, the program resumes execution from where it left off.

Examples: HTTP requests to external services, reading/writing files, querying databases.

Can Both Be Used Together?

Yes, parallelism and asynchrony can (and often should) be used together, depending on the type of application and the problem to be solved.
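As a minimal sketch of the distinction, the snippet below uses Parallel.For for a CPU-bound calculation and async/await with Task.WhenAll for simulated I/O in the same program. FakeIoAsync is a hypothetical helper, and Task.Delay stands in for a real network or disk call.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

// CPU-bound: split a calculation across cores with Parallel.For.
long[] squares = new long[1_000];
Parallel.For(0, squares.Length, i => squares[i] = (long)i * i);
long sumOfSquares = squares.Sum();

// I/O-bound: start several waits and await them together.
// Hypothetical helper: Task.Delay simulates waiting on an external resource.
async Task<string> FakeIoAsync(string name, int milliseconds)
{
    await Task.Delay(milliseconds); // no thread is blocked during the wait
    return name;
}

string[] ioResults = await Task.WhenAll(
    FakeIoAsync("orders", 200),
    FakeIoAsync("customers", 100));

Console.WriteLine($"Sum of squares: {sumOfSquares}");
Console.WriteLine($"I/O results: {string.Join(", ", ioResults)}");
```

Each slot of the array is written by exactly one iteration, so the parallel loop needs no locking here.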

Implementing Parallelism in ASP.NET Core

To practice parallelism, we will create a simple sample application, and, throughout the post, we will add five of the main methods for dealing with parallelism in ASP.NET Core.

All examples covered in the post are available in this GitHub repository: ParallelFlow Source Code.

1. Parallel.ForEach

To create the base application, you can use the command below:

dotnet new console -o ParallelFlow

Then open the project and place the following code in the Program.cs file:

using System.Diagnostics;

void Process()
{
    var customers = new int[10];
    for (int i = 0; i < customers.Length; i++)
        customers[i] = i + 1;

    Console.WriteLine("*== Sequential Execution ==*");
    var sequentialTime = MeasureTime(() =>
    {
        foreach (var customer in customers)
        {
            ProcessPurchase(customer);
        }
    });
    Console.WriteLine($"Total time (sequential): {sequentialTime.TotalSeconds:F2} seconds\n");

    Console.WriteLine("*== Parallel Execution ==*");
    var parallelTime = MeasureTime(() =>
    {
        Parallel.ForEach(
            customers,
            customer =>
            {
                ProcessPurchase(customer);
            }
        );
    });
    Console.WriteLine($"Total time (parallel): {parallelTime.TotalSeconds:F2} seconds");
}

void ProcessPurchase(int customerId)
{
    Console.WriteLine($"[Customer {customerId}] Purchase started...");
    Thread.Sleep(1000);
    Console.WriteLine($"[Customer {customerId}] Purchase completed");
}

TimeSpan MeasureTime(Action action)
{
    var stopwatch = Stopwatch.StartNew();
    action();
    stopwatch.Stop();
    return stopwatch.Elapsed;
}

Process();

Let’s analyze this code:

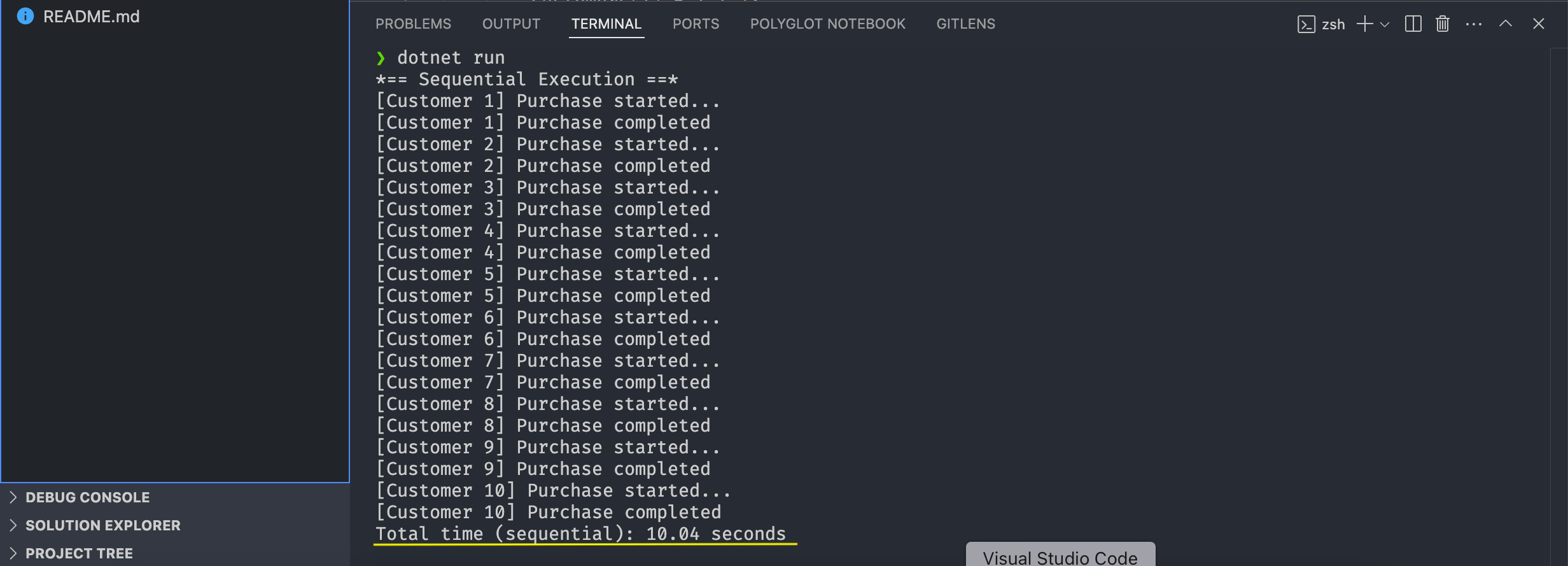

First, an array of integers with 10 elements (customers) is created. Then, a purchase is simulated for each customer, but in two different ways: one sequential and one parallel.

In the first part (sequential execution), the array of customers is traversed using a foreach loop. For each customer, the ProcessPurchase function is called, which simulates a purchase by printing a start message, waiting 1 second with Thread.Sleep(1000), and then printing a message indicating that the purchase has been completed. The auxiliary method MeasureTime wraps this loop: it uses a Stopwatch to record the elapsed time, which is then displayed on the console.

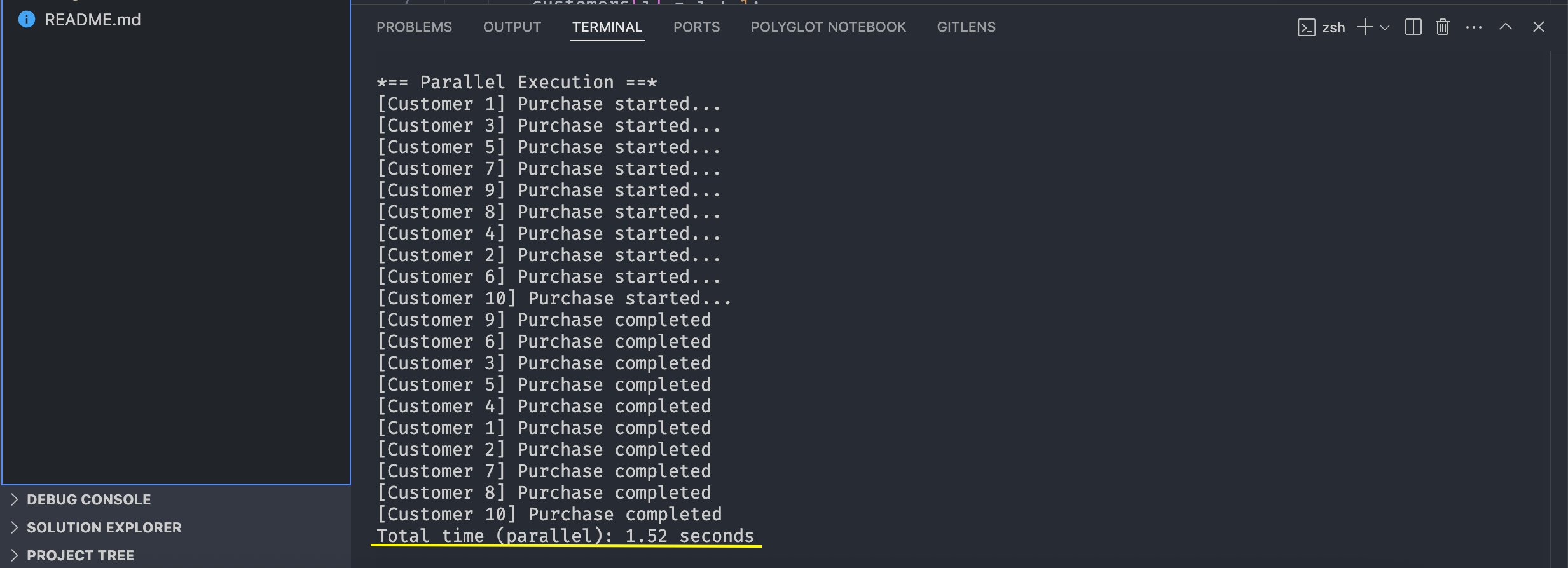

In the second part (parallel execution), the same purchase operation is performed for each customer, but using Parallel.ForEach, which allows operations to be executed at the same time in multiple threads. This way, instead of waiting for one customer to finish before starting the next, multiple purchases can be processed simultaneously. Finally, the time is measured with the same MeasureTime method and displayed in the console.

To check which approach is more efficient, simply run the application. You can do this using the command in the terminal: dotnet run.

Then you will have something similar to the image below:

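Note that the sequential execution took 10.4 seconds, while the parallel execution took only 1.52 seconds. For independent tasks like these, which spend most of their time waiting, running them in parallel cuts the total time dramatically. The exact figures depend on how many threads the thread pool makes available on your machine.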
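As a rough illustration of how the degree of parallelism affects total time, the sketch below caps Parallel.ForEach at two concurrent workers through ParallelOptions.MaxDegreeOfParallelism. The cap of 2, the 100 ms sleep and the eight-item array are assumptions chosen for the demonstration.

```csharp
using System;
using System.Diagnostics;
using System.Threading;
using System.Threading.Tasks;

var customers = new[] { 1, 2, 3, 4, 5, 6, 7, 8 };

// Hypothetical cap: at most 2 purchases are processed at the same time.
var options = new ParallelOptions { MaxDegreeOfParallelism = 2 };

var stopwatch = Stopwatch.StartNew();
Parallel.ForEach(customers, options, customer =>
{
    Thread.Sleep(100); // stands in for the blocking purchase work
});
stopwatch.Stop();

// 8 items, 2 at a time, 100 ms each: roughly 400 ms instead of 800 ms sequentially.
Console.WriteLine($"Elapsed with 2 workers: {stopwatch.ElapsedMilliseconds} ms");
```

Raising the cap shortens the run until the machine runs out of available threads or cores, which is why measured times rarely match the theoretical minimum.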

2. Parallel.Invoke

Parallel.Invoke provides a straightforward way to execute multiple actions in parallel, especially in scenarios where you want to run multiple methods or blocks of code at the same time, without returning a value, and where you need to wait for all actions to finish before continuing.

The example below demonstrates how to use Parallel.Invoke:

void RunInvoke()
{
    Parallel.Invoke(
        () => PerformingAnAction("SendDataToA", 1000),
        () => PerformingAnAction("SendDataToB", 500),
        () => PerformingAnAction("SendDataToC", 2000)
    );

    Console.WriteLine("All tasks are finished.");
}

void PerformingAnAction(string name, int delay)
{
    Console.WriteLine($"Starting {name} on thread {Thread.CurrentThread.ManagedThreadId}");
    Thread.Sleep(delay);
    Console.WriteLine($"Ending {name}");
}

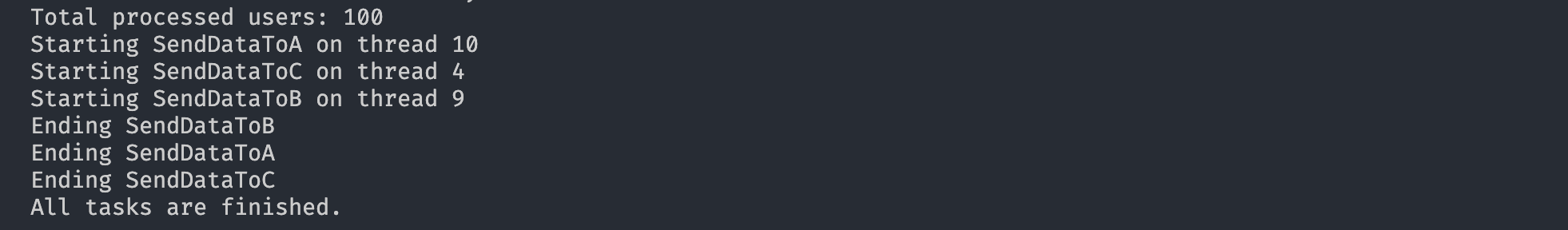

If you run the method, you will have the following result:

Note that the SendDataToA, SendDataToB and SendDataToC tasks are started at the same time, each in a different thread, and execution only continues after they are all finished.

Avoid using Parallel.Invoke when:

- The actions involve asynchronous I/O like await httpClient.GetAsync(...), in which case Task.WhenAll might be a better choice.

- You need to use cancellation, async/await or more explicit exception propagation.

Notes:

- Parallel.Invoke is not asynchronous; it blocks the calling thread until all actions finish.

- If one of the actions throws an exception, Parallel.Invoke will wrap them all in an AggregateException.
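The exception behavior described in the notes can be sketched as follows. The three actions and their error messages are made up for illustration; the point is only that failures arrive wrapped in an AggregateException whose InnerExceptions can be inspected one by one.

```csharp
using System;
using System.Threading.Tasks;

int failures = 0;

try
{
    Parallel.Invoke(
        () => Console.WriteLine("Action A completed"),
        () => throw new InvalidOperationException("Action B failed"),
        () => throw new TimeoutException("Action C timed out"));
}
catch (AggregateException ex)
{
    // InnerExceptions holds the exceptions thrown by the individual actions.
    foreach (var inner in ex.InnerExceptions)
    {
        failures++;
        Console.WriteLine($"{inner.GetType().Name}: {inner.Message}");
    }
}

Console.WriteLine($"Actions that failed: {failures}");
```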

3. Task.WhenAll and Task.WhenAny

These methods are useful when you want to run several tasks at the same time and then wait either for all of them (WhenAll) or for just the first one to finish (WhenAny).

Below, let’s see an example of how to use each of them.

Task.WhenAll: You want to run three tasks at the same time—for example, to fetch data from three different sources at the same time, and only move forward when you have all of them. So you could do the following:

async Task UsingWhenAll()
{
    var task1 = Task.Delay(2000).ContinueWith(_ => "Task 1 done");
    var task2 = Task.Delay(3000).ContinueWith(_ => "Task 2 done");
    var task3 = Task.Delay(1000).ContinueWith(_ => "Task 3 done");

    var allResults = await Task.WhenAll(task1, task2, task3);

    foreach (var result in allResults)
    {
        Console.WriteLine(result);
    }

    Console.WriteLine("All tasks completed.");
}

Note that Task.WhenAll waits for every task to finish before moving on. This method is useful for situations where you need to wait for everything and process the results together, for example, retrieving data from multiple sources and processing only after getting all of them.

Avoid using Task.WhenAll when:

- Tasks depend on each other.

- You have too many concurrent tasks.

- Individual error handling is required. The task returned by Task.WhenAll collects every failure in an AggregateException, but awaiting it rethrows only the first one. This makes it difficult to handle errors individually.

- You don’t need to wait for all tasks to finish at the same time.
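When individual error handling is needed but Task.WhenAll is still convenient, one possible approach is to keep references to the original tasks and inspect each of them after the await fails. The sketch below assumes a hypothetical LoadAsync helper that fails on demand.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper: simulates a data source that may fail.
async Task<string> LoadAsync(string source, bool fail)
{
    await Task.Delay(50);
    if (fail) throw new InvalidOperationException($"{source} is unavailable");
    return $"{source} loaded";
}

Task<string>[] loads =
{
    LoadAsync("Source A", fail: false),
    LoadAsync("Source B", fail: true),
    LoadAsync("Source C", fail: true),
};

Task<string[]> whenAll = Task.WhenAll(loads);
int failedCount = 0;

try
{
    await whenAll;
}
catch (InvalidOperationException)
{
    // The await surfaced a single exception; check every task for the full picture.
    foreach (var load in loads)
    {
        if (load.IsFaulted)
        {
            failedCount++;
            Console.WriteLine($"Failed: {load.Exception?.InnerException?.Message}");
        }
        else
        {
            Console.WriteLine($"OK: {load.Result}");
        }
    }
}

Console.WriteLine($"Failures recorded on the combined task: {whenAll.Exception?.InnerExceptions.Count}");
```

Reading Result inside the loop is safe here because every task has already completed by the time the catch block runs.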

Task.WhenAny: You want to start several tasks at the same time and act as soon as the first one finishes, without having to wait for the others. In this case, you could do the following:

async Task UsingWhenAny()
{
    var task1 = Task.Delay(2000).ContinueWith(_ => "Task 1 done");
    var task2 = Task.Delay(3000).ContinueWith(_ => "Task 2 done");
    var task3 = Task.Delay(1000).ContinueWith(_ => "Task 3 done");

    var firstFinished = await Task.WhenAny(task1, task2, task3);

    Console.WriteLine($"First completed: {firstFinished.Result}");
    Console.WriteLine("Other tasks may still be running...");
}

Note that with Task.WhenAny you can be very efficient because you don’t have to wait until all other tasks are finished—you can move on as soon as the first one finishes. Some common examples for using Task.WhenAny are timeouts, fallbacks and task racing.
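The timeout use case mentioned above can be sketched by racing the real work against Task.Delay. SlowOperationAsync and the 200 ms limit are assumptions for illustration only.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical slow call that takes about 2 seconds.
async Task<string> SlowOperationAsync()
{
    await Task.Delay(2000);
    return "Slow operation finished";
}

Task<string> work = SlowOperationAsync();
Task timeout = Task.Delay(200);

// WhenAny returns whichever task completes first.
Task winner = await Task.WhenAny(work, timeout);

string outcome = winner == work
    ? await work
    : "Timed out after 200 ms";

Console.WriteLine(outcome); // prints "Timed out after 200 ms"
```

Keep in mind that losing the race does not stop the slow operation; it keeps running unless it is cancelled explicitly.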

Notes:

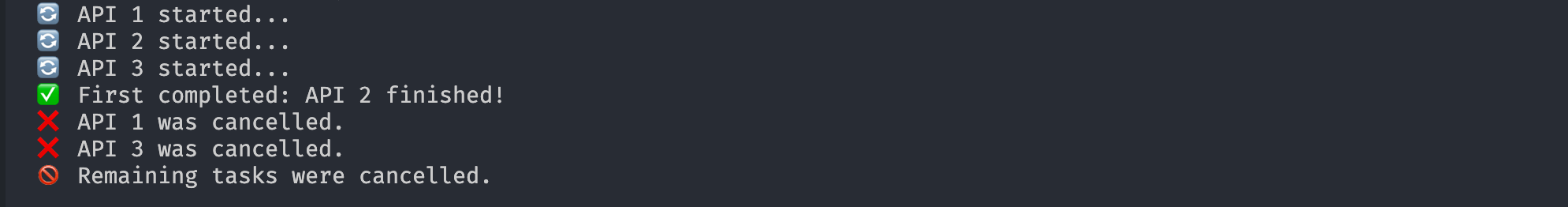

If you only need the first result and don’t want other tasks to continue wasting resources in the background, you can use CancellationTokenSource to cancel the rest of the tasks after the first one completes.

In this case, you can do the following:

using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

async Task Run()
{
    using var cts = new CancellationTokenSource();

    var task1 = SimulateApiCall("API 1", 3000, cts.Token);
    var task2 = SimulateApiCall("API 2", 1000, cts.Token);
    var task3 = SimulateApiCall("API 3", 2000, cts.Token);

    var tasks = new[] { task1, task2, task3 };

    var completed = await Task.WhenAny(tasks);
    Console.WriteLine($"First completed: {await completed}");

    cts.Cancel();

    try
    {
        await Task.WhenAll(tasks);
    }
    catch (OperationCanceledException)
    {
        Console.WriteLine("Remaining tasks were cancelled.");
    }
}

async Task<string> SimulateApiCall(string name, int delay, CancellationToken token)
{
    Console.WriteLine($"{name} started...");

    try
    {
        await Task.Delay(delay, token);
        return $"{name} finished!";
    }
    catch (OperationCanceledException)
    {
        Console.WriteLine($"{name} was cancelled.");
        throw;
    }
}

So, if you run this code, you will have the following result in the terminal:

Note that the second task was the first to finish because it had the shortest delay (1000 ms). After that, the other two were canceled.

4. Task.Run

Task.Run is useful in situations where you want to execute a task asynchronously without blocking the main thread.

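Use Task.Run for CPU-heavy tasks, such as complex calculations. Avoid using it for I/O operations: I/O APIs such as HttpClient or file streams already offer asynchronous methods that release the thread while waiting, so wrapping them in Task.Run only occupies an extra thread pool thread.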

The example below demonstrates how to use Task.Run to simulate a heavy calculation.

async Task<string> ProcessDataAsync()
{
    return await Task.Run(() =>
    {
        // Simulates heavy work
        Thread.Sleep(20);
        return "Processing completed!";
    });
}
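Since Thread.Sleep only simulates load, here is a sketch with genuinely CPU-bound work offloaded through Task.Run. CountPrimes is a hypothetical helper using simple trial division, picked only because it keeps a core busy.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical CPU-bound helper: counts primes below a limit by trial division.
int CountPrimes(int limit)
{
    int count = 0;
    for (int n = 2; n < limit; n++)
    {
        bool isPrime = true;
        for (int d = 2; d * d <= n; d++)
        {
            if (n % d == 0) { isPrime = false; break; }
        }
        if (isPrime) count++;
    }
    return count;
}

// Task.Run queues the calculation on the thread pool and returns immediately.
Task<int> primesTask = Task.Run(() => CountPrimes(100_000));
Console.WriteLine("Calculation started; the caller is free to do other work...");

// Awaiting resumes here once the background calculation has finished.
int primeCount = await primesTask;
Console.WriteLine($"Primes below 100000: {primeCount}"); // 9592
```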

5. Combining Parallelism with Asynchrony with Parallel.ForEachAsync

Combining parallelism with asynchrony can bring several advantages, depending on the situation. Parallel.ForEachAsync processes many items simultaneously, and because each item is handled by an asynchronous delegate, threads are released while waiting for results instead of being blocked.

Let’s take a look at an example that combines parallelism (Parallel.ForEachAsync) with asynchrony (async/await). It also uses the ConcurrentBag<T> type, a thread-safe collection suited to gathering results from concurrent operations.

using System.Collections.Concurrent;

async Task ExecuteAsync()
{
    var userIds = Enumerable.Range(1, 100).ToList();
    var processedUsers = new ConcurrentBag<string>();

    var parallelOptions = new ParallelOptions
    {
        MaxDegreeOfParallelism = Environment.ProcessorCount
    };

    await Parallel.ForEachAsync(userIds, parallelOptions, async (userId, cancellationToken) =>
    {
        var userData = await FetchUserDataAsync(userId);
        var result = await ProcessUserDataAsync(userData);
        processedUsers.Add(result);
    });

    // At this point, 'processedUsers' contains all the results
    Console.WriteLine($"Total processed users: {processedUsers.Count}");
}

// No access modifier: these are local functions when placed in Program.cs
async Task<string> FetchUserDataAsync(int userId)
{
    await Task.Delay(100); // Simulate I/O-bound async work
    return $"UserData-{userId}";
}

async Task<string> ProcessUserDataAsync(string userData)
{
    await Task.Delay(50); // Simulate processing work
    return $"Processed-{userData}";
}

Let’s analyze this code. Here, we define an asynchronous method called ExecuteAsync, which aims to process user data in parallel.

At the beginning of the method, we create a list with IDs from 1 to 100, representing the users that need to be processed. Then, we create a collection for concurrent access called processedUsers (of type ConcurrentBag<string>, which lets multiple threads add items safely), which we’ll use to store the results of the processing of each user.

To control the number of tasks that can be executed at the same time, we use MaxDegreeOfParallelism, which is set according to the number of cores of the machine’s processor, for a balanced use of resources.

After that, we use the Parallel.ForEachAsync method to iterate through the list of IDs in parallel. For each ID, it fetches the user data asynchronously using FetchUserDataAsync and then processes that data with ProcessUserDataAsync. The result of this processing is then added to the processedUsers collection.

At the end of the execution, all user data has been processed in parallel and stored in the collection, and the total number of users processed is displayed in the console.

This way we can combine Parallel.ForEachAsync with async/await methods to take advantage of CPU + I/O resources. While parallelism (via Parallel.ForEachAsync) allows processing multiple items at the same time, using multiple threads when necessary, asynchrony (async/await) allows freeing up the thread while waiting for I/O.
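One detail the example leaves unused is the cancellationToken parameter that Parallel.ForEachAsync hands to each iteration. The sketch below shows one way to forward it to the awaited work, and stores results in a ConcurrentDictionary keyed by ID, since ConcurrentBag does not preserve any order. The cap of 4 and the 20 ms delay are arbitrary assumptions.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

using var cts = new CancellationTokenSource();
var results = new ConcurrentDictionary<int, string>();

var options = new ParallelOptions
{
    MaxDegreeOfParallelism = 4,   // hypothetical cap for illustration
    CancellationToken = cts.Token // cancelling cts stops the loop
};

await Parallel.ForEachAsync(Enumerable.Range(1, 20), options, async (userId, token) =>
{
    // Forward the token so a pending wait can be cancelled too.
    await Task.Delay(20, token);
    results[userId] = $"Processed-{userId}";
});

// Keyed storage lets the results be read back in a predictable order.
foreach (var key in results.Keys.OrderBy(k => k).Take(3))
{
    Console.WriteLine(results[key]);
}
Console.WriteLine($"Total: {results.Count}");
```

Calling cts.Cancel() while the loop is running would make the awaited ForEachAsync call throw an OperationCanceledException, so real code would typically wrap it in a try/catch.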

Conclusion

Parallelism improves performance in suitable scenarios by executing multiple tasks at the same time. When we combine parallelism with asynchrony, we can get the benefits of both approaches.

In this post, we have looked at five resources currently available in ASP.NET Core to implement the concept of parallelism. Each of them has its own advantages and disadvantages and fits better in different situations.

So, I hope this post has helped you understand the concept of parallelism, its importance and how to use it in your day-to-day applications.
