This content originally appeared on DEV Community and was authored by Ismail Kovvuru

Learn why running one container per pod is a Kubernetes best practice. Explore real-world fintech use cases, security benefits, and scaling advantages.

What Is a Pod in Kubernetes?

Definition:

A Pod is the smallest deployable unit in Kubernetes. It represents a single instance of a running process in your cluster.

A pod:

- Can host one or more containers

- Shares the same network namespace and storage volumes among all its containers

- Is ephemeral — meant to be created, run, and replaced automatically when needed
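To make the definition concrete, here is a minimal sketch that builds a single-container Pod manifest as a plain Python dictionary and prints it as JSON. kubectl accepts JSON manifests as well as YAML. The `single_container_pod` helper and its parameters are hypothetical; the field names follow the Kubernetes `v1` Pod schema.

```python
import json


def single_container_pod(name: str, image: str, port: int) -> dict:
    """Build a minimal v1 Pod manifest with exactly one container (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # All containers in a Pod share its network namespace,
                    # so this port is reachable on the Pod IP.
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


if __name__ == "__main__":
    # The output can be piped to `kubectl apply -f -`.
    print(json.dumps(single_container_pod("my-api", "my-api-image:v1", 8080), indent=2))
```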

Principle: “One Container per Pod”

Definition:

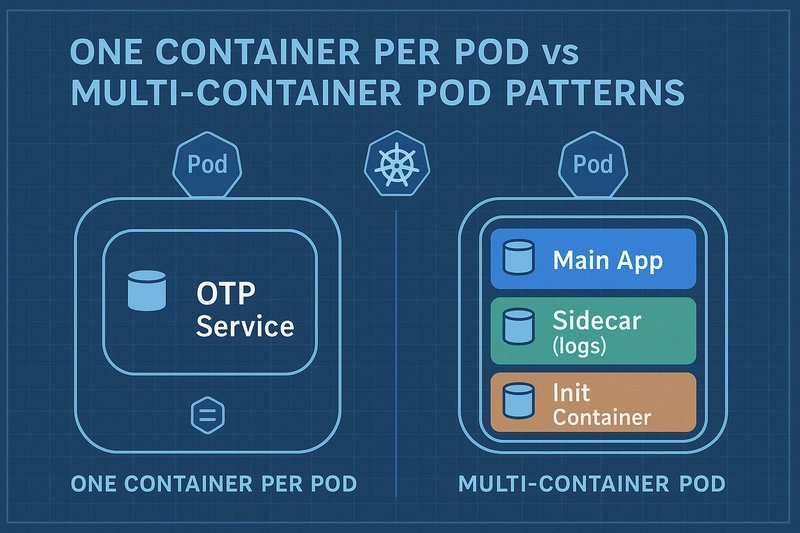

While Kubernetes supports multiple containers in one pod, the best practice is to run only one container per pod — the single responsibility principle applied at the pod level.

This principle makes each pod act like a microservice unit, cleanly isolated, focused, and independently scalable.
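A quick way to audit manifests against this principle is a small lint check. The sketch below is a hypothetical helper, not a standard tool: it flags Pod specs with more than one entry in `spec.containers`, lets you whitelist intentional sidecars by name, and does not count `spec.initContainers`.

```python
def lint_one_container(pod: dict, allowed_sidecars: frozenset = frozenset()) -> list[str]:
    """Return warnings for Pods that break the one-container-per-pod rule.

    Containers named in `allowed_sidecars` are treated as intentional
    exceptions (e.g. a logging sidecar). Init containers live in
    spec.initContainers and are not counted here.
    """
    name = pod.get("metadata", {}).get("name", "<unnamed>")
    containers = pod.get("spec", {}).get("containers", [])
    app_containers = [c["name"] for c in containers if c["name"] not in allowed_sidecars]
    if len(app_containers) > 1:
        return [f"{name}: {len(app_containers)} app containers ({', '.join(app_containers)})"]
    return []


pod = {"metadata": {"name": "demo"}, "spec": {"containers": [{"name": "api"}, {"name": "worker"}]}}
print(lint_one_container(pod))  # ['demo: 2 app containers (api, worker)']
```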

Why “One Container per Pod” in Production?

Isolation of Responsibility

- Each container does one job, which makes:

  - Debugging easier

  - Logging cleaner

  - Ownership clear (dev vs. ops)

Scalability

You can:

- Horizontally scale pods with a single container based on CPU/RAM/load

- Apply pod autoscaling without worrying about co-packaged containers
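Autoscaling is predictable here because the HorizontalPodAutoscaler's metric describes one workload. The sketch below reproduces the core of the documented HPA calculation, `desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric)`, with the default 0.1 tolerance and clamping to `minReplicas`/`maxReplicas`. It is a simplified model that ignores stabilization windows, missing metrics and unready pods.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for a single metric (e.g. average CPU %)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the HPA leaves the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
print(desired_replicas(4, 90, 60))  # 6
```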

Maintainability

- Easier CI/CD pipelines

- Smaller image sizes

- Easier to test, upgrade, or rollback individually

Fault Tolerance

If one pod/container crashes:

- Only that service is affected, not an entire coupled group

What If We Don’t Follow It?

| Problem | Consequence |
| ----------------------------- | ------------------------------------------------------------ |
| Multiple unrelated containers | Tight coupling, hard to debug & test |
| Mixed logging/monitoring | Noisy logs, ambiguous metrics |
| Co-dependency | Can’t scale or update services independently |
| Increased blast radius | One container failure could affect the whole pod’s operation |

Where and How to Use This Principle

When to Use One Container per Pod

| Situation | Use One Container? | Why |
| -------------------------------- | ------------------ | ----------------------------------------------- |
| Independent microservices | Yes | Clean design, easy to manage |
| Stateless backend or API | Yes | Scalability, fault isolation |
| Event-driven consumers | Yes | Simple, lean, retry logic handled by controller |
| Data processors (e.g., ETL jobs) | Yes | Lifecycle-bound, logs isolated |

When to Consider Multiple Containers (with Caution)

| Use Case | Type | Description |
| ----------------------- | ------------ | ----------------------------------------------- |
| Sidecar (logging/proxy) | Shared Scope | Shares volume or networking with main container |
| Init container | Pre-start | Runs setup script before app starts |
| Ambassador pattern | Gateway | Acts as proxy, often combined with service mesh |

Benefits of Using One Container per Pod

| Benefit | Description |
| ------------------ | ------------------------------------------- |
| Simpler Deployment | Easy to define and deploy YAML |
| Easier Monitoring | Logs and metrics tied to one process |
| Better DevOps Flow | Aligned with microservice CI/CD pipelines |
| Container Reuse | One container image = multiple environments |
| Rolling Updates | Zero-downtime with **Deployment strategy** |
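The zero-downtime claim rests on the Deployment's `RollingUpdate` settings (plus readiness probes, so new pods only count as available once ready). A small sketch of the documented arithmetic, assuming the defaults `maxSurge: 25%` and `maxUnavailable: 25%`: percentages become pod counts by rounding `maxSurge` up and `maxUnavailable` down.

```python
import math


def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = math.ceil(replicas * max_surge_pct / 100)               # rounded up
    unavailable = math.floor(replicas * max_unavailable_pct / 100)  # rounded down
    return replicas + surge, replicas - unavailable


print(rolling_update_bounds(2))  # (3, 2): never fewer than 2 available pods
print(rolling_update_bounds(4))  # (5, 3)
```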

How to Apply It in Practice

Kubernetes YAML Example (Single Container Pod):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-api
spec:
  containers:
    - name: api-container
      image: my-api-image:v1
      ports:
        - containerPort: 8080
```

Best Applied In:

- Production microservices

- CI/CD pipelines

- Infrastructure-as-Code (IaC) like Helm, Kustomize, Terraform

- Monitoring dashboards (Grafana/Prometheus), because metrics/logs are clean

Recommendation

| Aspect | Recommendation | Reason |
| ------------------------ | ------------------------------------------------------------------------------- | ------------------------ |
| **Production Use** | Strongly Yes | Clean, scalable, secure |
| **Learning/Dev** | Yes | Easier to debug and test |
| **Multiple Containers?** | Use only with Sidecar or Init Containers when **tight coupling is intentional** | |

Breaking the Rule with Purpose: When to Use Sidecars, Init Containers, and OPA in Kubernetes

In Kubernetes, the principle of “One Container per Pod” is often recommended to maintain simplicity, separation of concerns, and ease of scaling. This approach ensures each pod does one thing well, following the Unix philosophy.

But let’s face it — production environments are complex.

There are real-world scenarios where this rule, while solid, becomes a bottleneck. For example:

- What if your application needs a logging agent running alongside it?

- What if you need to perform a setup task before the main container starts?

- What if you need to enforce security policies on what gets deployed?

This is where Kubernetes-native Pod Patterns come into play. These aren’t workarounds — they’re intentional design features, battle-tested across thousands of production clusters.

Let’s dive into these patterns.

Kubernetes Pod Design Patterns: Definitions and Purposes

Before comparing use cases, here’s a quick overview of each pattern.

Sidecar Container

A sidecar is a secondary container in the same pod as your main app. It usually provides auxiliary features like logging, monitoring, service mesh proxies (e.g., Envoy in Istio), or data sync tools.

Example: Fluent Bit running as a sidecar to ship logs to a centralized logging system like ELK or CloudWatch.
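The app/sidecar split usually hinges on a shared volume: the app writes log files, the sidecar reads and forwards them. The sketch below imitates the reading half with an offset-based tail. It illustrates the technique only and is not how Fluent Bit works internally; the `read_new_lines` helper is hypothetical.

```python
import os
import tempfile


def read_new_lines(path: str, offset: int) -> tuple[list[str], int]:
    """Return complete lines appended to `path` since byte `offset`, plus the new offset.

    A trailing partial line (no newline yet) is left for the next call.
    """
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n")
    if end == -1:
        return [], offset  # nothing complete yet
    chunk = data[: end + 1]
    return chunk.decode("utf-8").splitlines(), offset + len(chunk)


# A temporary directory stands in for the emptyDir volume shared by both containers.
with tempfile.TemporaryDirectory() as shared:
    log_path = os.path.join(shared, "app.log")
    with open(log_path, "wb") as app_log:  # the "app" container writes
        app_log.write(b"balance checked\notp sent\npartial")
    lines, pos = read_new_lines(log_path, 0)  # the "sidecar" reads and would forward
    print(lines, pos)  # ['balance checked', 'otp sent'] 25
```

A real shipper would call this in a loop and persist the offset so it can resume after its own restart.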

Init Container

An Init Container runs before your main application container starts. It’s used for tasks that must complete successfully before the app begins, such as waiting for a database to become available or initializing a volume.

Example: A script that pulls secrets from AWS Secrets Manager before the main app starts.
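An init container's job is typically a small blocking script. Below is a hedged Python sketch of the "wait for the database" case: it retries a TCP connection until the dependency accepts connections or a deadline passes, and its exit code decides whether the app container may start. The `DB_HOST`/`DB_PORT` variables, their defaults and the `wait_for_tcp` helper are hypothetical.

```python
import os
import socket
import time


def wait_for_tcp(host: str, port: int, timeout_s: float = 60.0,
                 interval_s: float = 2.0) -> bool:
    """Poll host:port until a TCP connection succeeds or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # Only the TCP handshake is checked; no protocol-level login happens.
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:  # refused, unreachable, DNS not yet resolvable, timeout
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval_s)


def main() -> int:
    """Init-container entry point: exit code 0 lets the app container start."""
    # Hypothetical variable names and defaults for the database dependency.
    host = os.environ.get("DB_HOST", "bank-db")
    port = int(os.environ.get("DB_PORT", "5432"))
    return 0 if wait_for_tcp(host, port) else 1


# In the init container image, run: raise SystemExit(main())
```

Listed under `spec.initContainers`, a non-zero exit marks the init container as failed, so the main container is not started.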

Multi-Container Pod

Sometimes, you need multiple containers to share resources (like volumes or network namespaces) and work tightly together as a single unit. Kubernetes allows this via multi-container pods.

Example: An app + proxy architecture, where a caching proxy like NGINX shares a volume with the app to serve cached assets.

OPA (Open Policy Agent)

OPA is not a pod pattern but a general-purpose policy engine. In Kubernetes it typically runs as a validating admission webhook (directly or through Gatekeeper) and evaluates policies before workloads are admitted to the cluster.

Example: Prevent deploying pods that run as root or don’t have resource limits defined.
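Before wiring up OPA, the same two rules can be prototyped as plain functions, which is handy for unit-testing manifests in CI. This is a Python sketch, not OPA or Rego. It assumes "runs as root" means `runAsNonRoot` is not `true` (container-level setting overriding pod-level), and "resource limits" means both `cpu` and `memory` limits.

```python
def policy_violations(pod: dict) -> list[str]:
    """Flag containers that may run as root or lack CPU/memory limits."""
    spec = pod.get("spec", {})
    pod_non_root = spec.get("securityContext", {}).get("runAsNonRoot")
    msgs = []
    for c in spec.get("containers", []):
        # A container-level securityContext overrides the pod-level one.
        non_root = c.get("securityContext", {}).get("runAsNonRoot", pod_non_root)
        if non_root is not True:
            msgs.append(f"{c['name']}: runAsNonRoot is not enforced")
        limits = c.get("resources", {}).get("limits", {})
        missing = [r for r in ("cpu", "memory") if r not in limits]
        if missing:
            msgs.append(f"{c['name']}: missing limits for {', '.join(missing)}")
    return msgs


pod = {"spec": {"containers": [{"name": "api", "resources": {"limits": {"cpu": "300m"}}}]}}
print(policy_violations(pod))
# ['api: runAsNonRoot is not enforced', 'api: missing limits for memory']
```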

Use Case Comparison: When to Use What?

Let’s break it down by use case and see where single-container pods hold up — and where patterns like sidecars and init containers are essential.

| Use Case | Single Container | Multi-Container (Sidecar/Init) | Why / Benefit |
| --------------------------------------- | ------------------- | --------------------------------- | ------------------------------------------------------------- |
| Simple microservice | Recommended | Not Needed | Keeps it simple, isolated, and independently scalable |
| App + logging agent | Lacking | Use Sidecar | Sidecar can ship logs (e.g., Fluent Bit, Promtail) separately |
| Pre-start setup (e.g., init database) | Not Possible | Use Init Container | Guarantees setup tasks are done before app runs |
| App with service mesh | Not Enough | Sidecar (e.g., Envoy) | Enables traffic control, mTLS, tracing via proxies |
| Deploy policy enforcement | With OPA Hook | OPA Enforced | Prevents insecure or non-compliant pod specs |
| App needs config from secrets manager | Missing Logic | Init or Sidecar | Pull secrets securely before runtime |
| Application needs tightly coupled logic | Better Separate | Can Use | Only if logic cannot be decoupled (e.g., proxy + app pair) |

The Trade-Offs You Should Be Aware Of

While these patterns are powerful, they’re not always the right answer. Some trade-offs include:

| Pattern | Pros | Cons |
| ------------------- | --------------------------------------------------- | ---------------------------------------------------------------------- |
| **Sidecar** | Modular, reusable, supports observability | Resource sharing, lifecycle management complexity |
| **Init Container** | Clean init logic, enforces sequence | Adds to pod startup latency |
| **Multi-Container** | Co-location simplifies some tightly coupled tasks | Harder to scale independently, debugging more complex |
| **OPA** | Declarative policy control, security at deploy time | Learning curve, requires policy writing and admission controller setup |

Should You Use These Patterns?

Yes — when the use case justifies it.

These patterns exist to make Kubernetes production-grade. But like any engineering decision, use them with intent, not out of trend.

Tip: Start with “One Container per Pod.” Break that rule only when there’s a clear, repeatable reason — like logging, proxying, initialization, or security enforcement.

Real-World Scenario: Banking App Traffic Spike

Problem:

A banking application has high traffic for balance inquiries. Customers now also want OTPs and transaction alerts quickly. The app starts failing under load because:

- Logging service can’t keep up

- OTP system has race conditions

- Application startup is unreliable during node restarts

Before — Problematic YAML (One Container per Pod)

balance-app.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: balance-app
spec:
  containers:
    - name: balance-app
      image: mybank/balance-check:v1
      ports:
        - containerPort: 8080
```

Before: Problematic Architecture (One Container, Poor Setup)

```text
┌────────────────────────┐
│   User (Mobile/Web)    │
└────────┬───────────────┘
         │
         ▼
┌────────────────────────────┐
│   balance-app Pod (v1)     │ ← Monolithic (one container handles everything)
└────────────────────────────┘
         │
         ├── OTP call → Fails if OTP pod isn't ready
         └── Internal logs → Lost on crash
```

🔻 Problems:

- No readiness probe

- No log persistence

- OTP microservice failures break the request flow

- Crash = no trace/debug

What’s going wrong?

| Component | Issue |
| ------------------- | ---------------------------------------------------------------------------------------- |
| Logging | Logs are stored inside the container. Once the container crashes, logs are lost. |
| OTP Microservice | It runs in a separate pod. If OTP service isn't ready, the balance-app fails to connect. |
| No Retry or Wait | There’s no mechanism to wait for OTP or DB to be ready before app starts |
| Hard to Debug | You can’t access logs post-mortem or know if failures happened at startup |

After — Solved YAML (Still One Container per Pod, But Better)

*You don’t use Kubernetes patterns yet, but you improve the setup by:*

- Mounting the log directory on a pod-level volume (`emptyDir`) so logs survive container restarts

- Adding a readiness probe so the Pod receives no traffic until the app is ready

- Injecting the addresses of external dependencies through environment variables

balance-app-fixed.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: balance-app
spec:
  containers:
    - name: balance-app
      image: mybank/balance-check:v2
      ports:
        - containerPort: 8080
      env:
        - name: OTP_SERVICE_URL
          value: "http://otp-service:9090"
        - name: DB_HOST
          value: "bank-db"
      readinessProbe:
        httpGet:
          path: /health
          port: 8080
        initialDelaySeconds: 10
        periodSeconds: 5
      volumeMounts:
        - name: logs
          mountPath: /app/logs
  volumes:
    - name: logs
      emptyDir: {}
```
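On the application side, the probe only helps if `/health` reflects real readiness. A minimal sketch using Python's standard `http.server`, assuming the app reads `OTP_SERVICE_URL` and `DB_HOST` from the manifest's `env` section. The `dependencies_ready` flags are hypothetical placeholders for real checks against the database and the OTP service.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer

# Injected by the Pod spec's `env` section; defaults are for local runs only.
OTP_SERVICE_URL = os.environ.get("OTP_SERVICE_URL", "http://otp-service:9090")
DB_HOST = os.environ.get("DB_HOST", "bank-db")

# Hypothetical readiness flags; a real app would set these after successfully
# connecting to DB_HOST and calling OTP_SERVICE_URL.
dependencies_ready = {"db": False, "otp": False}


def health_status(checks: dict) -> int:
    """200 when every dependency check passes, otherwise 503 (not ready)."""
    return 200 if checks and all(checks.values()) else 503


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/health":
            self.send_error(404)
            return
        status = health_status(dependencies_ready)
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write(b"ok" if status == 200 else b"not ready")


def serve(port: int = 8080) -> None:
    """Serve on the containerPort that the readinessProbe targets."""
    HTTPServer(("", port), HealthHandler).serve_forever()
```

The kubelet treats HTTP 200 to 399 as a passing `httpGet` probe, so returning 503 keeps the Pod out of the Service's endpoints until the checks pass.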

After — Improved One-Container Setup (Same Pattern, Better Practice)

```text
┌────────────────────────┐
│   User (Mobile/Web)    │
└────────┬───────────────┘
         │ HTTPS/API Call
         ▼
┌──────────────────────────────┐
│  balance-app Pod (v2)        │ ← Still 1 container, but:
│  - readinessProbe            │
│  - env vars for OTP & DB     │
│  - volumeMounts for logs     │
└────────┬───────────────┬─────┘
         │               │
         │               ▼
         │        ┌──────────────┐
         │        │ OTP Service  │ ← Separate Pod
         │        └──────────────┘
         ▼
┌──────────────────────────────┐
│  Logs Persisted (emptyDir)   │ ← Logs retained on crash
└──────────────────────────────┘
```

Explanation of the Fixes

| Fix | Purpose |
| ---------------------------- | ----------------------------------------------------------------- |
| `readinessProbe` | Prevents traffic until the app is actually ready |
| `env` variables for OTP & DB | External service URLs are injected in a clean, reusable way |
| `volumeMounts` + `emptyDir` | Stores logs outside the container's writable layer, so they survive a container crash and restart (but not deletion of the Pod) |
| Still One Container | Yes. No sidecar or init container yet, just improved hygiene |

Summary

| Version | Containers | Resiliency | Logging | Service Coordination | Deployment Quality |
| ------------------ | ---------- | ---------- | --------------------- | ------------------------- | ------------------ |
| Before | 1 | Poor | Volatile | Manual, error-prone | Naïve |
| After (Improved) | 1 | Medium | Volatile but retained | Structured via ENV/Probes | Good baseline |

Real-World Scenario: Banking App Traffic Spike, Revisited with Pod Patterns

- A banking application runs as a single-container Pod serving all responsibilities: balance check, OTP, logs, alerts.

- During traffic spikes (e.g., salary day, multiple users checking balance + receiving OTP), performance drops.

- OTPs are delayed, logs are dropped, CPU spikes — causing user frustration.

BEFORE: Problematic YAML (Monolithic Pod Pattern)

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: banking-app
spec:
  containers:
    - name: banking-app
      image: ismailcorp/banking-app:latest
      ports:
        - containerPort: 8080
      env:
        - name: OTP_ENABLED
          value: "true"
        - name: LOGGING_ENABLED
          value: "true"
      resources:
        limits:
          cpu: "500m"
          memory: "256Mi"
```

What’s Wrong Here?

| Issue | Problem |
|---|---|
| Monolithic Design | OTP, logging, app logic all bundled together |
| No Scalability | Can’t scale OTP or logging independently |
| CPU Bottlenecks | OTP spikes during traffic cause app logic to slow down |
| Hard to Audit | Logs generated internally, no audit separation |
| No Policy Controls | No security policy (e.g., secrets as env vars, no resource governance) |

AFTER: Kubernetes Pattern Approach (Sidecar + Separate Deployments + OPA)

Deployment: banking-app (business logic)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: banking-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: banking-app
  template:
    metadata:
      labels:
        app: banking-app
    spec:
      containers:
        - name: banking-service
          image: ismailcorp/banking-app:latest
          ports:
            - containerPort: 8080
          resources:
            limits:
              cpu: "300m"
              memory: "256Mi"
```

Deployment: otp-service (separate microservice, scaled independently)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: otp-service
spec:
  replicas: 3
  selector:
    matchLabels:
      app: otp-service
  template:
    metadata:
      labels:
        app: otp-service
    spec:
      containers:
        - name: otp-generator
          image: ismailcorp/otp-service:latest
          ports:
            - containerPort: 9090
          resources:
            limits:
              cpu: "150m"
              memory: "128Mi"
```
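It is worth checking what the split costs in capacity. The short sketch below converts the CPU and memory limits from the two Deployments above and sums them across replicas. The parsers handle only the suffixes used here (`m`, plain cores, `Mi`, `Gi`) and are not a full implementation of the Kubernetes quantity format.

```python
def cpu_millicores(q: str) -> int:
    """'300m' -> 300, '2' -> 2000 (plain cores or the milli suffix only)."""
    return int(q[:-1]) if q.endswith("m") else round(float(q) * 1000)


def mem_mib(q: str) -> int:
    """'256Mi' -> 256, '1Gi' -> 1024 (Mi/Gi suffixes only)."""
    if q.endswith("Gi"):
        return int(q[:-2]) * 1024
    if q.endswith("Mi"):
        return int(q[:-2])
    raise ValueError(f"unsupported quantity: {q}")


# (replicas, cpu limit, memory limit) taken from the two Deployments
workloads = {
    "banking-app": (2, "300m", "256Mi"),
    "otp-service": (3, "150m", "128Mi"),
}
total_cpu_m = sum(r * cpu_millicores(c) for r, c, _ in workloads.values())
total_mem = sum(r * mem_mib(m) for r, _, m in workloads.values())
print(f"{total_cpu_m}m CPU, {total_mem}Mi memory")  # 1050m CPU, 896Mi memory
```

Compared with the single monolithic Pod (500m CPU, 256Mi), the total limit envelope roughly doubles for CPU; in exchange, each service can be scaled on its own.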

Sidecar: log-agent (Sidecar Pattern for Audit Logging)

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: banking-app-with-logger
spec:
  containers:
    - name: banking-service
      image: ismailcorp/banking-app:latest
      ports:
        - containerPort: 8080
      # The app must write its logs into the shared volume for the sidecar to see them
      volumeMounts:
        - name: logs
          mountPath: /var/log/app
    - name: log-sidecar
      image: fluent/fluentd:latest
      volumeMounts:
        - name: logs
          mountPath: /var/log/app
  volumes:
    - name: logs
      emptyDir: {}
```

OPA Policy: Enforce Sidecar and No Plaintext Secrets

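```rego
package kubernetes.admission

import rego.v1

# Deny Pods that do not include the audit logging sidecar
deny contains msg if {
	input.request.kind.kind == "Pod"
	not has_log_sidecar
	msg := "Missing audit logging sidecar"
}

# Deny Pods that pass a secret through an environment variable
deny contains msg if {
	input.request.kind.kind == "Pod"
	input.request.object.spec.containers[_].env[_].name == "SECRET_KEY"
	msg := "Secrets should be mounted as volumes, not set as ENV"
}

has_log_sidecar if {
	input.request.object.spec.containers[_].name == "log-sidecar"
}
```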

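This policy targets OPA deployed as a validating admission webhook, which the Kubernetes API server calls before persisting a Pod; the `input.request` fields come from the AdmissionReview it sends. OPA Gatekeeper uses the same Rego engine but wraps policies in ConstraintTemplates, reads the object from `input.review.object`, and reports results through a `violation` rule, so the policy needs small changes to run under Gatekeeper.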
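To check manifests against these two rules before they reach the admission controller, a plain-Python mirror of the Rego logic can run in CI. This sketch is a re-implementation for local testing only, not a replacement for OPA; it reads the same `request.kind.kind` and `request.object` fields that the Rego policy above expects, and returns a set of messages just as a Rego partial set rule does.

```python
def deny(admission_review: dict) -> set[str]:
    """Python mirror of the two `deny` rules in the Rego policy."""
    request = admission_review.get("request", {})
    if request.get("kind", {}).get("kind") != "Pod":
        return set()
    containers = request.get("object", {}).get("spec", {}).get("containers", [])
    msgs = set()
    if not any(c.get("name") == "log-sidecar" for c in containers):
        msgs.add("Missing audit logging sidecar")
    if any(e.get("name") == "SECRET_KEY" for c in containers for e in c.get("env", [])):
        msgs.add("Secrets should be mounted as volumes, not set as ENV")
    return msgs


review = {"request": {"kind": {"kind": "Pod"}, "object": {"spec": {"containers": [
    {"name": "banking-service", "env": [{"name": "SECRET_KEY", "value": "x"}]}]}}}}
print(sorted(deny(review)))
# ['Missing audit logging sidecar', 'Secrets should be mounted as volumes, not set as ENV']
```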

Service: For App & OTP

```yaml
apiVersion: v1
kind: Service
metadata:
  name: banking-service
spec:
  selector:
    app: banking-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: otp-service
spec:
  selector:
    app: otp-service
  ports:
    - protocol: TCP
      port: 81
      targetPort: 9090
```
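Clients inside the cluster reach these workloads through the Service's DNS name and its `port`, not the container's `targetPort`. A small sketch of how the banking app could build the OTP endpoint, assuming the Services live in the `default` namespace and the cluster uses the default `cluster.local` domain (both assumptions):

```python
def service_url(name: str, port: int, namespace: str = "default",
                cluster_domain: str = "cluster.local", scheme: str = "http") -> str:
    """Fully qualified in-cluster URL for a Service (port = the Service's `port`)."""
    return f"{scheme}://{name}.{namespace}.svc.{cluster_domain}:{port}"


# otp-service listens on port 81 and forwards to targetPort 9090 on the pods.
print(service_url("otp-service", 81))
# http://otp-service.default.svc.cluster.local:81
```

From a Pod in the same namespace, the short form `http://otp-service:81` resolves as well.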

What Did We Fix?

| Fix Area | Before (Monolithic) | After (K8s Patterns + OPA) |
|---|---|---|
| Architecture | Single Container Pod | Modular Pods with Sidecars and Deployments |
| Logging | Built-in, hard to audit | Fluentd Sidecar, independent |
| OTP Handling | Built-in | Separate OTP service (scalable) |
| Scalability | Entire pod only | OTP and app scale independently |
| Security Policies | None | Enforced via OPA (e.g., no plain secrets) |
| Compliance (e.g. PCI) | Weak | Strong audit and policy governance |

Conclusion: Which Kubernetes Pod Approach Should You Use?

Start with One Container per Pod

- Cleanest, simplest microservices structure

- Easy to debug, scale, and monitor

- Ideal for small services, MVPs, or early-stage architectures

Evolve to Kubernetes Pod Patterns as Needed

As complexity grows (especially in fintech, healthcare, or e-commerce), adopt design patterns to solve real-world needs:

- Sidecars → Add observability (e.g., logging, tracing), service mesh, proxies

- Init Containers → Ensure startup order, handle configuration or bootstrapping

- OPA/Gatekeeper Policies → Enforce security, compliance, and governance

In High-Stakes Environments (Fintech, Regulated E-Commerce): Use a Hybrid Approach

- Use single-container pods for most microservices

- Add sidecars/init containers when functionality justifies it

- Enforce policy-driven controls with OPA or Kyverno to meet compliance (e.g., PCI-DSS, RBI, HIPAA)

Trade-off Summary

| Approach | Best For | Trade-Offs / Complexity |
|---|---|---|
| One Container per Pod | Simplicity, scalability | Lacks orchestration logic |
| Pod Patterns (Sidecar, Init) | Logging, proxying, workflows | More YAML, more coordination |
| OPA/Gatekeeper Policies | Compliance, audit, guardrails | Requires policy authoring skills |

Final Advice:

Build simple, evolve with patterns, and secure with policy as your system matures.
