This content originally appeared on DEV Community and was authored by Flavius Dinu

This is a submission for the Pulumi Deploy and Document Challenge: Fast Static Website Deployment

What I Built

For this challenge, I built a simple static website based on Docusaurus for tutorials and blog posts. As I’m not too seasoned with frontend development, I only made small changes to the template and added some very simple blog posts and tutorials.

To make this more interesting, I decided to build the infrastructure in both Python and JavaScript using Pulumi, and deploy the websites to different S3 buckets and subdomains.

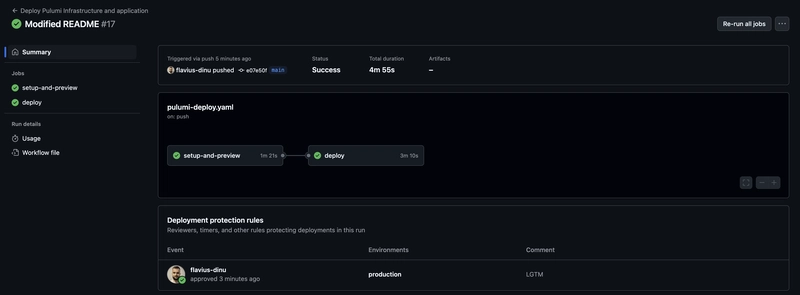

The Python Pulumi code is deployed with GitHub Actions. This leverages static AWS credentials stored as repository secrets. I have implemented two workflows:

- One for the actual deployment of the Pulumi Python code and the application, called pulumi-deploy. This one runs on push to the main branch, but it first does a pulumi preview and waits for manual approval to do a pulumi up

- Another one for removing the application from S3 and destroying the infrastructure, called pulumi-destroy. This one will run manually

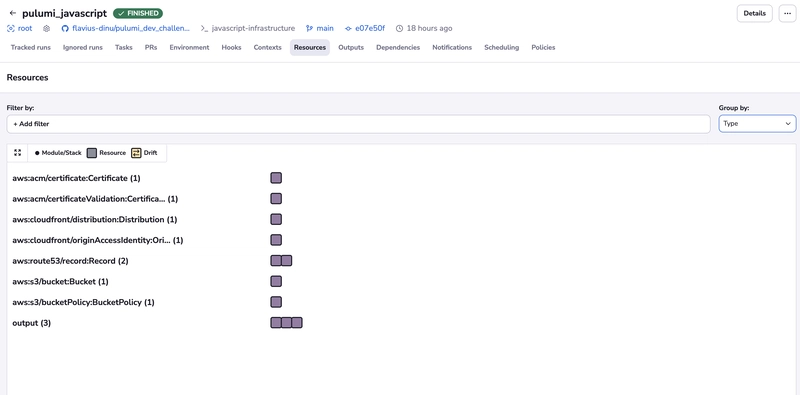

The JavaScript Pulumi code is deployed with Spacelift and leverages dynamic credentials based on Spacelift’s cloud integration for AWS.

Live Demo Link

Even though it is the same website, it is deployed differently as I’ve mentioned above, thus it is using different subdomains:

Project Repo

Deploying a static website with Pulumi

The aim of this project is to showcase how to deploy a static website built using Docusaurus by using:

- Pulumi (Python) and GitHub Actions

- Pulumi (JavaScript) and Spacelift

Repo structure explained:

- .github – GitHub Actions workflows for deploying the Pulumi Python code

- docs – Documentation website built with Docusaurus that can easily host your blogs and tutorials

- javascript-infrastructure – Pulumi JavaScript infrastructure that will be used by Spacelift to deploy the infrastructure and the application

- python-infrastructure – Pulumi Python infrastructure that will be used by GitHub Actions to deploy the infrastructure and the application

- images – Images used throughout the documentation

To use this project, feel free to fork the repository and follow the instructions below.

Prerequisites

- An AWS Route53 hosted zone for your domain

- An AWS S3 bucket for Pulumi state hosting

- An AWS role for building dynamic credentials for Spacelift

How to create

…Ensure you check the README.md to understand how to use the automation.

My Journey

Initially, I started this challenge by thinking about what kind of static website I would implement. I was actually debating between making a newsletter or creating a blog/tutorials website, the latter ending up being the right choice for me.

As I’m by no means a frontend developer, I started researching which framework would be the best fit for my limited experience, and Docusaurus seemed like the easiest choice for me. Again, this is very subjective, so I would encourage everyone to do their own research before building something like this, especially if this is not your day-to-day.

I followed the Docusaurus documentation to initialize the project, and then I ran the website locally to see how it looks. After diving into the repository template, I found some of the things I wanted to change pretty easily:

- How to add an author to the website

- How to add social links

- Where to change the footer

- How to add new articles

For some of the other things, I ran a lot of “grep -ri insert_whatever” commands inside the repository to find where something was used, and then made changes to reflect my preferences.

Now that I was done with the website, I had to decide how to handle the static hosting. I debated using cloud providers other than the ones I’m accustomed to (AWS, Azure, Oracle Cloud), but as I already had my domain registered in AWS Route53, I ended up choosing AWS.

Next, I had to decide what services I would use, so I chose:

- S3 bucket for static content storage

- CloudFront distribution for content delivery

- ACM certificate for HTTPS, validated through DNS

- Route53 DNS configuration: 1 record for ACM certificate validation and an A record pointing to the CloudFront distribution

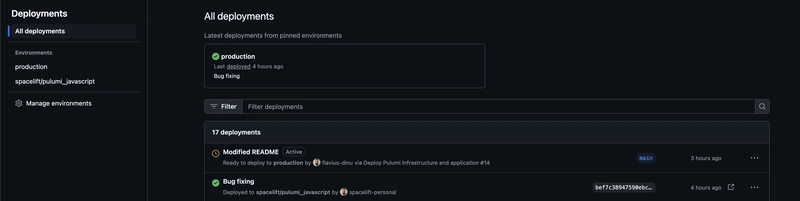

Then I built the initial version of the infrastructure using Pulumi with Python, and I decided to deploy it via Spacelift. Then I thought to myself: wouldn’t it be more interesting to explore a different way to build and deploy the infrastructure? So I ended up with two versions: one implemented in Python that I deployed, in the end, with GitHub Actions, and another implemented in JavaScript that I deployed with Spacelift.

By having two deployment options, I could easily see Pulumi in action and how the process differs between a generic CI/CD tool and an Infrastructure Orchestration platform. It took me a couple of hours to build the GitHub Actions CI/CD pipelines, but with Spacelift I was done in less than an hour.

I could’ve easily just kept a single version of the infrastructure, but I wanted the work to be more challenging, and that’s why I went for multiple versions.

During this process, I faced a couple of issues, but the most important thing that I’ve learned is the fact that CloudFront only accepts ACM certificates from the us-east-1 region.

This made me waste some time, as I couldn’t really understand why that was happening. So to make this clear for everyone, this occurs because even though CloudFront is a global service, its configuration and metadata are managed centrally in us-east-1. By requiring certificates in us-east-1, AWS avoids the complexity of syncing certificates across regions. I’m sure this is in the study material for multiple AWS certifications, but as I don’t use CloudFront much, it really slipped my mind.

Using Pulumi

I used Pulumi to define and deploy the necessary infrastructure for a static website in AWS. As Pulumi is versatile, it made it easy for me to build my infrastructure resources in the programming languages I like to use.

It was very easy to set up the boilerplate for both the Python and JavaScript examples by running:

pulumi new python

pulumi new javascript

After following the prompts, I started the actual development of the Python project.

Pulumi helped me easily:

- create an S3 bucket with website hosting enabled and tags

- use a CloudFront distribution to serve content securely, ensure only CloudFront can access the S3 bucket using Origin Access Identity, and enable HTTPS using ACM-issued certificates

- implement DNS Configuration with Route53 to retrieve the existing hosted zone and create the necessary DNS records
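To illustrate the Origin Access Identity item above, here is a small sketch of the bucket policy that restricts reads to a single OAI. It only builds the JSON document with the standard library; the function name oai_read_only_policy and the example ARNs are assumptions for illustration, not the repository's actual code.

```python
import json


def oai_read_only_policy(bucket_arn: str, oai_iam_arn: str) -> str:
    """Return a bucket policy that lets one CloudFront OAI read objects."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontOAIRead",
                "Effect": "Allow",
                # Only the OAI principal is allowed; there is no public access.
                "Principal": {"AWS": oai_iam_arn},
                "Action": "s3:GetObject",
                "Resource": f"{bucket_arn}/*",
            }
        ],
    }
    return json.dumps(policy)


if __name__ == "__main__":
    # Placeholder ARNs, shown only to demonstrate the call.
    print(oai_read_only_policy(
        "arn:aws:s3:::example-docs-bucket",
        "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity EXAMPLEID",
    ))
```

In a Pulumi program both ARNs are outputs that are only known at deploy time, so a helper like this would typically be called inside pulumi.Output.all(bucket.arn, oai.iam_arn).apply(...) and the result passed to an aws.s3.BucketPolicy resource.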

Why was Pulumi Beneficial

- Multi-Language Support – I implemented the same infrastructure twice using Python and JavaScript which can be useful for teams that have different backgrounds

- Resource Management with code – Pulumi lets you take advantage of programming capabilities (loops, conditionals) to dynamically configure resources; using functions such as the apply function makes it easy to handle dependencies

- Easy to use by developers – My background is in DevOps engineering, so I’ve seen firsthand the issues developers have with DevOps processes. Without self-service, developer velocity is affected, but by leveraging something that developers know, the impact is lower.

- Stateful approach – Pulumi’s stateful nature makes it easy to detect changes, manage dependencies, and ensure idempotency

Future developments

For this small project, I believe the infrastructure code is in good shape, but it would be beneficial to take advantage of some of the capabilities infrastructure orchestration platforms offer out of the box like:

- Policy as code – Policies could be implemented to restrict the CloudFront distribution’s price class, require multiple approvals for runs, and ensure you are notified in Slack or MS Teams about failed runs

- Drift detection – Drift happens, so why let it ruin your application? Implementing drift detection and remediation can be a lifesaver when it comes to ensuring infrastructure reliability and resilience

- Self-service – Developers should be able to deploy resources on demand while staying safe

- Secrets management – Use a specialized secrets management solution such as HashiCorp Vault, OpenBao, or Pulumi ESC to ensure secrets are securely stored and encrypted

- Write unit tests – Capture issues before applying the code

- Integrate security vulnerability scanning – Ensure you are not deploying code with vulnerabilities: use Checkov, KICS, or other specialized solutions for that

In addition to this, we can explore other deployment options, such as Pulumi Cloud, use other programming languages to build the infrastructure, and even choose other cloud providers.

Key points

This was a very fun challenge that had real-world applicability. I believe Pulumi’s flexibility makes it a powerful choice for managing cloud infrastructure, especially where teams prefer using programming languages.

Pulumi integrates easily with many CI/CD tools and Infrastructure Orchestration platforms to automate deployments, minimize human error, and implement governance.

It was a great opportunity to use Pulumi in different contexts and leverage modern DevOps technologies to streamline cloud infrastructure management.

Keep building!
