This content originally appeared on DEV Community and was authored by gus

A.S.T.R.A – Autonomous Synthetic Thought Response Animus – WIP

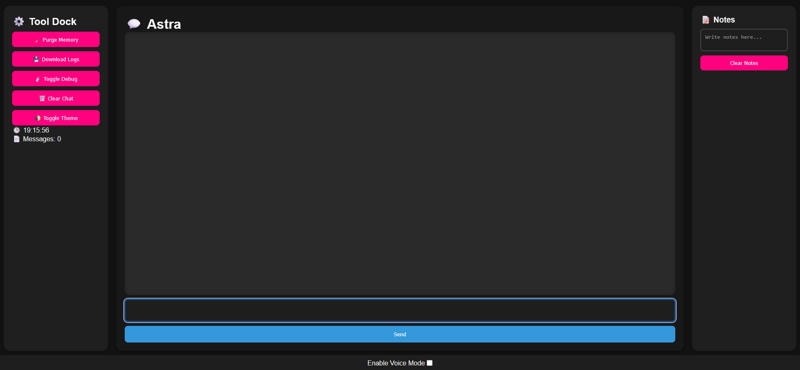

A.S.T.R.A is a project focused on creating a fully local, voice-enabled AI assistant that listens, speaks, remembers, and evolves with the user—acting more like a synthetic consciousness than a typical chatbot.

Managing thought, tasks, and mental load digitally can become overwhelming—most AI tools act like single-use prompts with no memory or personality. A.S.T.R.A (Autonomous Synthetic Thought Response Animus) aims to change that by serving as a self-hosted assistant that communicates naturally, recalls context, and adapts to your style over time. Whether through text or voice, Astra is always present and ready.

Features Implemented So Far

- Streaming AI Chat via `llama3` running on Ollama

- Voice Input using Whisper for local speech-to-text

- Voice Output using gTTS or Piper

- Auto Voice Looping – Astra listens again after speaking

- Frontend Chat UI with:

  - Markdown support

  - Syntax highlighting

  - Copy-to-clipboard buttons

  - Tool dock with status indicators

- Memory System:

  - Short-term memory

  - Running summaries

  - Long-term text-based recall

  - Defined personality prompt logic
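As a rough illustration of how the memory layers listed above might feed a single model prompt, here is a minimal sketch. The `MemoryLayers` class, the `build_prompt` function, and the bracketed section labels are all hypothetical names chosen for this example; the post does not show Astra's actual prompt logic, so treat this as one plausible arrangement rather than the project's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryLayers:
    """Hypothetical container for the memory layers named in the feature list."""
    personality: str = ""   # tone and decision rules
    long_term: str = ""     # condensed facts about the user
    summary: str = ""       # running summary of the conversation
    recent_turns: list = field(default_factory=list)  # (speaker, text) pairs


def build_prompt(memory: MemoryLayers, user_message: str, max_turns: int = 6) -> str:
    """Join the non-empty layers into one prompt, most stable context first."""
    sections = []
    if memory.personality:
        sections.append(f"[Personality]\n{memory.personality}")
    if memory.long_term:
        sections.append(f"[Long-term memory]\n{memory.long_term}")
    if memory.summary:
        sections.append(f"[Conversation summary]\n{memory.summary}")

    # Short-term memory: only the newest max_turns exchanges are included.
    turns = memory.recent_turns[-max_turns:] if max_turns > 0 else []
    if turns:
        lines = "\n".join(f"{speaker}: {text}" for speaker, text in turns)
        sections.append(f"[Recent turns]\n{lines}")

    sections.append(f"User: {user_message}\nAstra:")
    return "\n\n".join(sections)
```

Ordering the layers from most stable (personality) to most volatile (recent turns) is a design assumption here; it keeps the newest context closest to the user's message.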

Planned Enhancements

- [ ] Vector-based Long-Term Memory (RAG-style context recall)

- [ ] Plugin System via StruktAI (External tools, commands, APIs)

- [ ] Voice Persona Switching (Dynamic TTS voices)

- [ ] Memory Browser Interface (UI for reviewing context)

- [ ] Full Docker Support (Self-contained deployment)

- [ ] Interrupt Detection (Mid-reply cut-off awareness)

Why A.S.T.R.A?

The goal of Astra is to blend human-like presence with structured thought. It doesn’t just talk, it listens, recalls, and reflects. Astra is designed to reduce digital friction in daily life by managing context, remembering projects, and interacting through both voice and text.

It acts more like a personal operating system for your mind than a chatbot in a window. It is built for speed, privacy, and real interaction, without relying on external APIs unless you want to.

The Current State of Things

As of writing this, Astra is in an alpha phase with live voice input/output, a working streaming backend using Ollama, and a dynamic frontend interface. Whisper handles voice input, gTTS or Piper generates audio replies, and Astra loops back into listening automatically after each interaction.
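The listen, reply, speak, listen-again cycle described above can be sketched as a small loop. This is only an outline under stated assumptions: `voice_loop`, its three callables, and the `stop_phrase` parameter are hypothetical names for this example. In a real setup `listen` would wrap Whisper transcription, `think` would call the model, and `speak` would wrap gTTS or Piper playback, but none of those integrations are shown in the post.

```python
from typing import Callable, Optional


def voice_loop(
    listen: Callable[[], Optional[str]],
    think: Callable[[str], str],
    speak: Callable[[str], None],
    stop_phrase: str = "goodbye",
) -> int:
    """Run listen -> think -> speak until listen() returns None or the
    stop phrase is heard. Returns the number of completed exchanges."""
    exchanges = 0
    while True:
        heard = listen()      # e.g. record audio and transcribe it
        if heard is None:     # input source closed
            break
        text = heard.strip()
        if not text:          # silence: go straight back to listening
            continue
        if text.lower() == stop_phrase:
            break
        reply = think(text)
        speak(reply)          # assumed to block until playback finishes
        exchanges += 1        # then the loop listens again automatically
    return exchanges
```

Passing the three stages in as plain functions keeps the loop testable with stubs, for example `voice_loop(lambda: input("> "), str.upper, print)` for a keyboard-only dry run.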

Development is currently focused on extending Astra’s plugin architecture, enhancing long-term memory, and building tighter integration with the StruktAI framework.

Astra is designed for people who want more than just answers; they want interaction that adapts and evolves.

Stay tuned, she’s growing.

Tahl0s / autonomous-synthetic-thought-response-animus

A “consciousness” born from code. Trained to listen. Designed to evolve.

A.S.T.R.A. – WIP

Autonomous Synthetic Thought Response Animus

A self-hosted voice-enabled AI assistant that listens, speaks, remembers, and evolves.

Features

- Streaming AI Chat via `llama3` (Ollama backend)

- Markdown support with custom formatting logic

- Voice input using Whisper (local STT)

- Voice output using gTTS (Google Text-to-Speech)

- Auto-resume listening after responses

- Copy button for all AI replies

- Real-time timestamp, message counter, and tool dock
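To make the streaming chat feature more concrete, here is a minimal sketch of reading a streamed reply from Ollama. It relies on the fact that Ollama's `/api/generate` endpoint streams newline-delimited JSON objects, each carrying a `response` text fragment and a `done` flag. The helper name `iter_reply_chunks` is hypothetical, and since the README lists LangChain in its stack, Astra's own backend may well handle streaming through that library instead.

```python
import json
from typing import Iterable, Iterator, Union


def iter_reply_chunks(lines: Iterable[Union[bytes, str]]) -> Iterator[str]:
    """Yield text fragments from a newline-delimited JSON stream.

    Each line is expected to be a JSON object with a "response" string and
    a "done" boolean, as produced by Ollama's /api/generate endpoint.
    """
    for raw in lines:
        if isinstance(raw, bytes):
            raw = raw.decode("utf-8")
        raw = raw.strip()
        if not raw:               # skip keep-alive or blank lines
            continue
        event = json.loads(raw)
        chunk = event.get("response", "")
        if chunk:
            yield chunk
        if event.get("done"):     # final object of the stream
            break
```

With the `requests` library, the lines could come from something like `requests.post("http://localhost:11434/api/generate", json={"model": "llama3", "prompt": prompt}, stream=True).iter_lines()`, assuming Ollama is running on its default port. Each yielded fragment can then be forwarded to the chat UI as it arrives.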

Tech Stack

- Frontend: HTML, CSS, JavaScript, Web APIs (Speech, Clipboard)

- Backend: Python (Flask), LangChain, Ollama (`llama3`), Whisper, gTTS

- Memory: JSON + TXT file-based context system

Memory Structure

| File | Purpose |
|---|---|
| `chat_log.json` | Stores last N interactions |
| `chat_summary.txt` | Running summary for context |
| `lt_summary_history.txt` | Rotating summary history |
| `long_term_memory.txt` | Condensed facts & user traits |
| `personality.txt` | Defines Astra’s tone and decision logic |
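The "last N interactions" behaviour of `chat_log.json` could look roughly like the sketch below. The function name `append_interaction`, the `keep_last` parameter, and the `{"user": ..., "assistant": ...}` entry shape are assumptions made for illustration; the README does not document the real file schema. Returning the trimmed-off entries is also an assumption, included because they are a natural input for a running summary.

```python
import json
from pathlib import Path


def append_interaction(log_path: Path, user: str, assistant: str, keep_last: int = 20):
    """Append one exchange to a JSON log and keep only the newest entries.

    Returns (kept, dropped): the entries still in the file, and the older
    ones that were trimmed off and could be folded into a summary.
    """
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")

    entries = []
    if log_path.exists():
        entries = json.loads(log_path.read_text(encoding="utf-8"))

    # Hypothetical entry shape; the real schema is not documented.
    entries.append({"user": user, "assistant": assistant})

    dropped = entries[:-keep_last]
    kept = entries[-keep_last:]
    log_path.write_text(
        json.dumps(kept, indent=2, ensure_ascii=False), encoding="utf-8"
    )
    return kept, dropped
```

A caller might run `append_interaction(Path("chat_log.json"), question, reply)` after each exchange and pass any dropped entries to whatever routine maintains `chat_summary.txt`.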

Setup Instructions

1. Install Requirements

pip…
